Introduction

MapLibre Native is a community-led fork of Mapbox GL Native. It is a C++ library that powers vector maps in native applications on multiple platforms. It renders maps from stylesheets that conform to the MapLibre Style Specification, a fork of the Mapbox Style Spec. Since it is derived from Mapbox’s original work, it also uses the Mapbox Vector Tile Specification as its vector tile format.

This documentation is intended for developers of MapLibre Native. If you are interested in using MapLibre Native, check out the main README.md on GitHub.

Platforms

This page describes the platforms that MapLibre Native is available on.

Overview

MapLibre Native uses a monorepo. Source code for all platforms lives in maplibre/maplibre-native on GitHub.

| Platform | Source | Notes |
|---|---|---|
| Android | platform/android | Integrates with the C++ core via JNI. |
| iOS | platform/ios, platform/darwin | Integrates with the C++ core via Objective-C++. |
| Linux | platform/linux | Used for development. Also widely used in production for raster tile generation. |
| Windows | platform/windows | |
| macOS | platform/macos, platform/darwin | Mainly used for development. There is some legacy AppKit code. |
| Node.js | platform/node | Uses NAN. Available as @maplibre/maplibre-gl-native on npm. |
| Qt | maplibre/maplibre-qt, platform/qt | Only platform that is partially split into another repository. |

Of these, Android and iOS are considered core projects of the MapLibre Organization.

GLFW

You can find an app that uses GLFW in platform/glfw. It works on macOS, Linux and Windows. The app shows an interactive map. Since GLFW adds relatively little complexity, this app is used a lot for development. You can also learn about the C++ API by studying the source code of the GLFW app.

Rendering Backends

Originally the project only supported OpenGL 2.0. In 2023, the renderer was modularized allowing for the implementation of alternate rendering backends. The first alternate rendering backend that was implemented was Metal, followed by Vulkan. In the future other rendering backends could be implemented such as WebGPU.

The table below shows which platforms support which rendering backend.

| Platform | OpenGL ES 3.0 | Vulkan 1.0 | Metal | WebGPU |
|---|---|---|---|---|
| Android | ✅ | ✅ | ❌ | ❌ |
| iOS | ❌ | ❌ | ✅ | ❌ |
| Linux | ✅ | ✅ | ❌ | ✅ |
| Windows | ✅ | ❌ | ❌ | ❓ |
| macOS | ❌ | ✅ | ✅ [1] | ✅ |
| Node.js | ✅ | ❌ | ✅ [2] | ❌ |
| Qt | ✅ | ❌ | ❌ | ❌ |

Build Tooling

In 2023 we adopted Bazel as a build tool (generator), mostly because it has better support for iOS than CMake. Some platforms can use CMake as well as Bazel.

| Platform | CMake | Bazel |
|---|---|---|
| Android | ✅ (via Gradle) | ❌ |
| iOS | ✅ [3] | ✅ |
| Linux | ✅ | ✅ |
| Windows | ✅ | ✅ |
| macOS | ✅ | ✅ |
| Node.js | ✅ | ❌ |
| Qt | ✅ | ❌ |

- Requires MoltenVK. Only available when built via CMake.

MapLibre Android Developer Guide

These instructions are for developers interested in making code-level contributions to MapLibre Native for Android.

Getting the source

Clone the git repository and pull in submodules:

git clone git@github.com:maplibre/maplibre-native.git

cd maplibre-native

git submodule update --init --recursive

cd platform/android

Requirements

Android Studio needs to be installed.

Open the platform/android directory to get started.

Setting an API Key

The test application (used for development purposes) uses MapTiler vector tiles, which require a MapTiler account and API key.

With the first Gradle invocation, Gradle will take the value of the MLN_API_KEY environment variable and save it to MapLibreAndroidTestApp/src/main/res/values/developer-config.xml. If the environment variable wasn’t set, you can edit developer-config.xml manually and add your API key to the api_key resource.

Running the TestApp

Run the configuration for the MapLibreAndroidTestApp module and select a device or emulator to deploy on.

Kotlin

All new code should be written in Kotlin.

Style Checking

To check Kotlin style, we use ktlint. This linter is based on the official Kotlin coding conventions. We integrate with it using the kotlinter Gradle plugin.

To check the style of all Kotlin source files, use:

$ ./gradlew checkStyle

To format all Kotlin source files, use:

$ ./gradlew formatKotlin

Profiling

See Tracy Profiling to understand how Tracy can be used for profiling.

MapLibre Android Tests

Render Tests

To run the render tests for Android, run the configuration for the androidRenderTest.app module.

Filtering Render Tests

You can filter which tests run by adding a filter flag to the arguments in platform/android/src/test/render_test_runner.cpp:

std::vector<std::string> arguments = {..., "-f", "background-color/literal"};

Viewing the Results

Once the application quits, use the Device Explorer to navigate to /data/data/org.maplibre.render_test_runner/files.

Double click android-render-test-runner-style.html. Right click on the opened tab and select Open In > Browser. You should see that a single render test passed.

Alternatively, to download (and open) the results from the command line, use:

filename=android-render-test-runner-style.html

adb shell "run-as org.maplibre.render_test_runner cat files/metrics/$filename" > $filename

open $filename

For Vulkan, use:

filename=android-vulkan-render-test-runner-style.html

Updating the Render Tests

Now let’s edit metrics/integration/render-tests/background-color/literal/style.json. Change this line:

"background-color": "red"

to

"background-color": "yellow"

We need to make sure that the new data.zip with the data for the render tests is installed on the device. You can use the following commands:

tar chf render-test/android/app/src/main/assets/data.zip --format=zip --files-from=render-test/android/app/src/main/assets/to_zip.txt

adb push render-test/android/app/src/main/assets/data.zip /data/local/tmp/data.zip

adb shell chmod 777 /data/local/tmp/data.zip

adb shell "run-as org.maplibre.render_test_runner unzip -o /data/local/tmp/data.zip -d files"

Rerun the render test app and reload the Device Explorer. When you re-open the HTML file with the results, you should now see a failing test.

Now download the actual.png in metrics/integration/render-tests/background-color/literal with the Device Explorer. Replace the corresponding expected.png on your local file system. Upload the new render test data again and run the test app once more.

Of course we don’t want to commit this change. But now you know how to add and debug (Android) render tests.

Adding Alternate Expected Images

You can add alternate expected images by copying the actual.png to a file whose name starts with expected. You can use the following command:

render_test_name=tile-lod/zoom-shift

adb exec-out run-as org.maplibre.render_test_runner cat files/metrics/integration/render-tests/$render_test_name/actual.png > metrics/integration/render-tests/$render_test_name/expected-android.png

This will pull tile-lod/zoom-shift/actual.png and save it as tile-lod/zoom-shift/expected-android.png.

Instrumentation Tests

To run the instrumentation tests, choose the “Instrumentation Tests” run configuration.

Your device needs to remain unlocked for the duration of the tests.

C++ Unit Tests

There is a separate Gradle project that contains a test app which runs the C++ Unit Tests. It does not depend on the Android platform implementations.

You can find the project in test/android. You can open this project in Android Studio and run the C++ Tests on an Android device or emulator.

To run a particular set of tests, you can modify the --gtest_filter flag in platform/android/src/test/test_runner.cpp. See the GoogleTest documentation for details on how to use this flag.

AWS Device Farm

The instrumentation tests and C++ unit tests run on AWS Device Farm. To see the results and the logs, go to:

Use the following login details (this is a read-only account):

| Alias | maplibre |

| Username | maplibre |

| Password | maplibre |

Documentation for MapLibre Android

API Documentation

We use Dokka for the MapLibre Android API documentation. The live documentation site can be found here.

Examples Documentation

The documentation site with examples uses MkDocs along with Material for MkDocs. You can check out the site here.

Building

To build the Examples Documentation you need to have Docker installed.

From platform/android, run:

make mkdocs

Next, visit http://localhost:8000/maplibre-native/android/examples/.

Snippets

We use a Markdown extension for snippets. This way code can be referenced instead of copy-pasted into the documentation. This prevents code examples from becoming out of date or failing to compile. The syntax is as follows:

// --8<-- [start:fun]
fun double(x: Int): Int {
    return 2 * x
}
// --8<-- [end:fun]

Next, you’ll be able to reference that piece of code in Markdown like so:

--8<-- "example.kt:fun"

Where example.kt is the path to the file.
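To illustrate what the start and end markers delimit, here is a minimal Python sketch that pulls out the lines between them. It is not the implementation of the Markdown extension, which handles more cases; the function name extract_section is hypothetical and only used for this illustration.

```python
def extract_section(source: str, name: str) -> str:
    """Return the lines between the '[start:name]' and '[end:name]' markers.

    Illustrative only: the real snippets extension is more capable.
    """
    start_marker = f"--8<-- [start:{name}]"
    end_marker = f"--8<-- [end:{name}]"
    collected = []
    inside = False
    for line in source.splitlines():
        if start_marker in line:
            inside = True  # begin collecting after the start marker line
            continue
        if end_marker in line:
            break  # stop at the end marker line
        if inside:
            collected.append(line)
    return "\n".join(collected)


# The same Kotlin example as shown in the text above.
example_kt = """\
// --8<-- [start:fun]
fun double(x: Int): Int {
    return 2 * x
}
// --8<-- [end:fun]
"""

print(extract_section(example_kt, "fun"))
```

Only the function body between the markers is returned, which is the part that ends up in the rendered documentation.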

Static Assets

Static assets are ideally uploaded to the MapLibre Native S3 Bucket.

Please open an issue with the ARN of your AWS account to get upload privileges.

You can use the macro {{ s3_url("filename.example") }} which will use a CDN instead of linking to the S3 bucket directly.

Benchmark

We have created a rendering performance benchmark for Android. The logic for this benchmark is encapsulated in BenchMarkActivity.kt.

Styles

We have hardcoded various styles, which you can override with a developer-config.xml by adding some XML with the following structure:

<array name="benchmark_style_names">

<item>Americana</item>

</array>

<array name="benchmark_style_urls">

<item>https://americanamap.org/style.json</item>

</array>

Retrieving Results

Results are logged to the console as they come in. After the benchmark is done running, a benchmark_results.json file is generated. You can pull it off the device with, for example, adb:

adb shell "run-as org.maplibre.android.testapp cat files/benchmark_results.json" \

> benchmark_results.json

Results

The benchmark_results.json containing the benchmark results will have the following structure.

{
  "results": [
    {
      "styleName": "AWS Open Data Standard Light",
      "syncRendering": true,
      "thermalState": 0,
      "fps": 34.70237085812839,
      "avgEncodingTime": 19.818289053808947,
      "avgRenderingTime": 7.7432454899654255,
      "low1pEncodingTime": 91.72538138784371,
      "low1pRenderingTime": 26.573758581509203
    }
  ],
  "deviceManufacturer": "samsung",
  "model": "SM-G973F",
  "renderer": "drawable",
  "debugBuild": true,
  "gitRevision": "fc95b79880223e34c2ce80339f698d095e3d63cd",
  "timestamp": 1736454325393
}

The meaning of the keys is as follows.

| Key | Description |

|---|---|

| styleName | Name of the style being benchmarked |

| syncRendering | Whether synchronous rendering was used |

| thermalState | Thermal state of the device during benchmark |

| fps | Average frames per second achieved during the benchmark |

| avgEncodingTime | Average time taken for encoding in milliseconds |

| avgRenderingTime | Average time taken for rendering in milliseconds |

| low1pEncodingTime | 1st percentile (worst case) encoding time in milliseconds |

| low1pRenderingTime | 1st percentile (worst case) rendering time in milliseconds |

| deviceManufacturer | Manufacturer of the test device |

| model | Model number/name of the test device |

| renderer | Type of renderer used (drawable for OpenGL ES, vulkan for Vulkan, legacy for the legacy OpenGL ES rendering backend) |

| debugBuild | Whether the build was a debug build |

| gitRevision | Git commit hash of the code version |

| timestamp | Unix timestamp of when the benchmark was run |
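As a rough illustration of how these keys can be consumed, the following Python sketch turns the JSON structure into one summary line per style. The function name summarize is hypothetical, and the sample is a copy of the structure shown above rather than new measurements.

```python
import json

# Same structure and values as the example benchmark_results.json above.
SAMPLE = """
{
  "results": [
    {
      "styleName": "AWS Open Data Standard Light",
      "syncRendering": true,
      "thermalState": 0,
      "fps": 34.70237085812839,
      "avgEncodingTime": 19.818289053808947,
      "avgRenderingTime": 7.7432454899654255,
      "low1pEncodingTime": 91.72538138784371,
      "low1pRenderingTime": 26.573758581509203
    }
  ],
  "deviceManufacturer": "samsung",
  "model": "SM-G973F",
  "renderer": "drawable",
  "debugBuild": true,
  "gitRevision": "fc95b79880223e34c2ce80339f698d095e3d63cd",
  "timestamp": 1736454325393
}
"""


def summarize(results_json: str) -> list:
    """Return one human-readable line per benchmarked style."""
    data = json.loads(results_json)
    device = f'{data["deviceManufacturer"]} {data["model"]} ({data["renderer"]})'
    lines = []
    for entry in data["results"]:
        lines.append(
            f'{device}: {entry["styleName"]}: {entry["fps"]:.1f} fps, '
            f'avg encoding {entry["avgEncodingTime"]:.1f} ms, '
            f'avg rendering {entry["avgRenderingTime"]:.1f} ms'
        )
    return lines


for line in summarize(SAMPLE):
    print(line)
```

To summarize a file pulled from a device, pass the file contents instead of SAMPLE, for example summarize(open("benchmark_results.json").read()).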

Large Scale Benchmarks on AWS Device Farm

Sometimes we do a large scale benchmark across a variety of devices on AWS Device Farm. We ran one such test in November 2024 to compare the performance of the then new Vulkan rendering backend against the OpenGL ES backend. There are some scripts in the repo to kick off the tests and to collect and plot the results:

scripts/aws-device-farm/aws-device-farm-run.sh

scripts/aws-device-farm/collect-benchmark-outputs.mjs

scripts/aws-device-farm/update-benchmark-db.mjs

scripts/aws-device-farm/plot-android-benchmark-results.py

Continuous Benchmarking

We run the Android benchmark on every merge to main.

You can find the results per commit here or pull them from our public S3 bucket:

aws s3 sync s3://maplibre-native/android-benchmark-render/ .

Benchmarks in Pull Request

To run the benchmarks (for Android), include the following line in a PR comment:

!benchmark android

A file with the benchmark results will be added to the workflow summary, which you can compare with the previous results in the bucket.
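One possible way to do that comparison is sketched below in Python. It assumes both files follow the benchmark_results.json structure described earlier and compares the fps value per style. The function name fps_changes and the numbers in the sample data are made up for illustration.

```python
def fps_changes(baseline: dict, candidate: dict) -> dict:
    """Map each style found in both runs to its relative fps change in percent."""
    base_fps = {r["styleName"]: r["fps"] for r in baseline["results"]}
    changes = {}
    for result in candidate["results"]:
        name = result["styleName"]
        # Skip styles that are missing from the baseline or have no usable fps.
        if name in base_fps and base_fps[name] > 0:
            changes[name] = (result["fps"] - base_fps[name]) / base_fps[name] * 100.0
    return changes


# Hypothetical values, not real benchmark results.
baseline = {"results": [{"styleName": "Americana", "fps": 40.0}]}
candidate = {"results": [{"styleName": "Americana", "fps": 44.0}]}

for style, change in fps_changes(baseline, candidate).items():
    print(f"{style}: {change:+.1f}% fps")
```

A positive value means the candidate run rendered more frames per second than the baseline. Device, renderer and thermal state should match between the two runs for the comparison to be meaningful.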

Release MapLibre Android

We make MapLibre Android releases available as a downloadable asset on GitHub as well as on Maven Central. Specifically, we make use of a Sonatype OSSRH repository provided by Maven Central.

Also see the current release policy.

Making a release

To make an Android release, do the following:

- Prepare a PR.
  - Update CHANGELOG.md in a PR, see for example this PR. The changelog should contain links to all relevant PRs for Android since the last release. You can use the script below with a GitHub access token with the public_repo scope. You will need to filter out PRs that do not relate to Android and categorize PRs as features or bugfixes.

        GITHUB_ACCESS_TOKEN=... node scripts/generate-changelog.mjs android

    The heading in the changelog must match ## <VERSION> exactly, or it will not be picked up. For example, for version 9.6.0:

        ## 9.6.0

  - Update android/VERSION with the new version.
- Once the PR is merged, the android-release.yml workflow will run automatically to make the release.
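Because a heading that does not match is silently ignored, it can help to check it before opening the PR. The following Python sketch shows one way to do so. It assumes "exactly" means a line consisting of nothing but ## followed by the version; the release workflow's own matching logic is not shown here, and the function name has_release_heading is hypothetical.

```python
import re


def has_release_heading(changelog: str, version: str) -> bool:
    """Return True if some line of the changelog is exactly '## <version>'."""
    pattern = rf"^## {re.escape(version)}$"
    return re.search(pattern, changelog, flags=re.MULTILINE) is not None


# Hypothetical changelog content for illustration.
changelog = "# Changelog\n\n## main\n\n## 9.6.0\n\n- Example entry\n"

print(has_release_heading(changelog, "9.6.0"))
print(has_release_heading(changelog, "9.6.1"))
```

A heading with extra text after the version, or a different heading level, is reported as missing by this check.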

MapLibre iOS Developer Guide

Bazel

Bazel is used for building on iOS.

You can generate an Xcode project thanks to the rules_xcodeproj integration.

You need to install bazelisk, a wrapper around Bazel that ensures that the version specified in .bazelversion is used.

brew install bazelisk

Creating config.bzl

Configure Bazel, otherwise the default config will get used.

cp platform/darwin/bazel/example_config.bzl platform/darwin/bazel/config.bzl

You need to set your BUNDLE_ID_PREFIX to be unique (ideally use a domain that you own in reverse domain name notation).

You can leave the APPLE_MOBILE_PROVISIONING_PROFILE_NAME alone.

Set the Team ID to the Team ID of your Apple Developer Account (paid or unpaid both work). If you do not know your Team ID, go to your Apple Developer account, log in, and scroll down to find your Team ID.

If you don’t already have a developer account, continue this guide and let Xcode generate a provisioning profile for you. You will need to update the Team ID later once a certificate is generated.

Create the Xcode Project

Run the following commands:

bazel run //platform/ios:xcodeproj --@rules_xcodeproj//xcodeproj:extra_common_flags="--//:renderer=metal"

xed platform/ios/MapLibre.xcodeproj

Then once in Xcode, click on “MapLibre” on the left, then “App” under Targets, then “Signing & Capabilities” in the tabbed menu. Confirm that no errors are shown.

Try to run the example App in the simulator and on a device to confirm your setup works.

Important

The Bazel configuration files are the source of truth of the build configuration. All changes to the build settings need to be done through Bazel, not in Xcode.

Troubleshooting

Provisioning Profiles

If you get a Python KeyError when processing provisioning profiles, you probably have some really old or corrupted profiles.

Have a look through ~/Library/MobileDevice/Provisioning\ Profiles and remove any expired profiles. Removing all profiles here can also resolve some issues.

Cleaning Bazel environments

You should almost never have to do this, but sometimes problems can be solved with:

bazel clean --expunge

Using Bazel from the Command Line

It is also possible to build and run the test application in a simulator from the command line without opening Xcode.

bazel run //platform/ios:App --//:renderer=metal

You can also build targets from the command line. For example, if you want to build your own XCFramework, see the ‘Build XCFramework’ step in the iOS CI workflow.

CMake

It is also possible to generate an Xcode project using CMake. As of February 2025, targets mbgl-core, ios-sdk-static and app (Objective-C development app) are supported.

cmake --preset ios-metal -DDEVELOPMENT_TEAM_ID=YOUR_TEAM_ID

xed build-ios/MapLibre\ Native.xcodeproj

Distribution

MapLibre iOS is distributed as an XCFramework via the maplibre/maplibre-gl-native-distribution repository. See Release MapLibre iOS for the release process. Refer to the ios-ci.yml workflow for an up-to-date recipe for building an XCFramework. As of February 2025 we use:

bazel build --compilation_mode=opt --features=dead_strip,thin_lto --objc_enable_binary_stripping \

--apple_generate_dsym --output_groups=+dsyms --//:renderer=metal //platform/ios:MapLibre.dynamic --embed_label=maplibre_ios_"$(cat VERSION)"

iOS Tests

iOS Unit Tests

To run the iOS unit tests via XCTest, run the tests for the ios_test target in Xcode or use the following Bazel command:

bazel test //platform/ios/test:ios_test --test_output=errors --//:renderer=metal

Render Tests

To run the render tests, run the RenderTest target from iOS.

When running in a simulator, use

# check for 'DataContainer' of the app with `*.maplibre.RenderTestApp` id

xcrun simctl listapps booted

to get the data directory of the render test app. This allows you to inspect test results. When adding new tests, the generated expectations and actual.png file can be copied into the source directory from here.

C++ Unit Tests

Run the tests from the CppUnitTests target in Xcode to run the C++ Unit Tests on iOS.

iOS Documentation

We use DocC for the MapLibre iOS documentation. The live documentation site can be found here.

Resources

You need to have aws-cli installed to download the resources from S3 (see below). Run the following command:

aws s3 sync --no-sign-request "s3://maplibre-native/ios-documentation-resources" "platform/ios/MapLibre.docc/Resources"

Then, to build the documentation locally, run the following command:

platform/ios/scripts/docc.sh preview

Resources like images should not be checked in but should be uploaded to the S3 Bucket. You can share a .zip with all files that should be added in the PR.

If you want to get direct access you need an AWS account to get permissions to upload files. Create an account and authenticate with aws-cli. Share the account ARN that you can get with

aws sts get-caller-identity

Examples

The code samples in the documentation should ideally be compiled on CI so they do not go out of date.

Fence your example code with

// #-example-code(LineTapMap)

...

// #-end-example-code

Prefix your documentation code block with

<!-- include-example(LineTapMap) -->

```swift

...

```

Then the code block will be updated when you run:

node scripts/update-ios-examples.mjs

Release MapLibre iOS

We make iOS releases to GitHub (a downloadable XCFramework), the Swift Package Index and CocoaPods. Everyone with write access to the repository is able to make releases using the instructions below.

Also see the current release policy.

Making a release

- Prepare a PR, see this PR as an example.
  - Update the changelog. The changelog should contain links to all relevant PRs for iOS since the last release. You can use the script below with a GitHub access token with the public_repo scope. You will need to filter out PRs that do not relate to iOS.

        GITHUB_ACCESS_TOKEN=... node scripts/generate-changelog.mjs ios

    The heading in the changelog must match ## <VERSION> exactly, or it will not be picked up. For example, for version 6.0.0:

        ## 6.0.0

  - Update the VERSION file in platform/ios/VERSION with the version to be released. We use semantic versioning, so any breaking changes require a major version bump. Use a minor version bump when functionality has been added in a backward compatible manner, and a patch version bump when the release contains only backward compatible bug fixes.
- Once the PR is merged, the ios-ci.yml workflow will detect that the VERSION file is changed, and a release will be made automatically.
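The semantic versioning rule described above can be sketched in Python as follows. The function name bump and the change labels "breaking", "feature" and "fix" are hypothetical names chosen for this illustration; they are not part of the release tooling.

```python
def bump(version: str, change: str) -> str:
    """Return the next version for a MAJOR.MINOR.PATCH version string."""
    major, minor, patch = (int(part) for part in version.split("."))
    if change == "breaking":
        # Breaking changes require a major bump; minor and patch reset to 0.
        return f"{major + 1}.0.0"
    if change == "feature":
        # Backward compatible functionality: minor bump, patch resets to 0.
        return f"{major}.{minor + 1}.0"
    if change == "fix":
        # Only backward compatible bug fixes: patch bump.
        return f"{major}.{minor}.{patch + 1}"
    raise ValueError(f"unknown change type: {change}")


print(bump("6.0.0", "breaking"))
print(bump("6.0.0", "feature"))
print(bump("6.0.0", "fix"))
```

The resulting string is what would go into platform/ios/VERSION and into the changelog heading.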

Pre-release

Run the ios-ci workflow. You can use the GitHub CLI:

gh workflow run ios-ci.yml -f release=pre --ref main

Or run the workflow from the Actions tab on GitHub.

The items under the ## main heading in platform/ios/CHANGELOG.md will be used as changelog for the pre-release.

Development Apps

There are two iOS apps available in the repo that you can use for MapLibre Native development: an Objective-C based app and a Swift based app.

Objective-C App

This app is available as “App” in the generated Xcode project.

The source code lives in platform/ios/app.

You can also build and run it from the command line with:

bazel run --//:renderer=metal //platform/ios:App

Swift App

The Swift App is mainly used to demo usage patterns in the example documentation.

This app is available as “MapLibreApp” in the generated Xcode project.

The source code lives in platform/ios/app-swift.

You can also build and run it from the command line with:

bazel run --//:renderer=metal //platform/ios/app-swift:MapLibreApp

macOS

MapLibre Native can be built for macOS. This is mostly used for development.

Note

There are some AppKit APIs for macOS in the source tree. However, those are not actively maintained. There is a discussion on whether we should remove this code.

File Structure

| Path | Description |
|---|---|
| platform/darwin | Shared code between macOS and iOS |
| platform/darwin/core | iOS/macOS specific implementations for interfaces part of the MapLibre Native C++ Core |
| platform/macos | macOS specific code |
| platform/macos/app | AppKit based example app |

Getting Started

Clone the repo:

git clone --recurse-submodules git@github.com:maplibre/maplibre-native.git

Make sure the following Homebrew packages are installed:

brew install bazelisk webp libuv icu4c jpeg-turbo glfw

brew link icu4c --force

You can get started building the project for macOS using either Bazel or CMake.

Bazel

Configure Bazel (optional):

cp platform/darwin/bazel/example_config.bzl platform/darwin/bazel/config.bzl

Run the GLFW app with a style of your choice:

bazel run --//:renderer=metal //platform/glfw:glfw_app -- --style https://sgx.geodatenzentrum.de/gdz_basemapworld_vektor/styles/bm_web_wld_col.json

Create and open Xcode project:

bazel run //platform/macos:xcodeproj --@rules_xcodeproj//xcodeproj:extra_common_flags="--//:renderer=metal"

xed platform/macos/MapLibre.xcodeproj

CMake

Configure CMake:

cmake --preset macos-metal

Build and run the render tests:

cmake --build build-macos-metal --target mbgl-render-test-runner

build-macos-metal/mbgl-render-test-runner --manifestPath=metrics/macos-xcode11-release-style.json

Build and run the C++ Tests:

cmake --build build-macos-metal --target mbgl-test-runner

npm install && node test/storage/server.js # required test server

# in another terminal

build-macos-metal/mbgl-test-runner

Create and open an Xcode project with CMake:

cmake --preset macos-metal-xcode

xed build-macos-metal-xcode/MapLibre\ Native.xcodeproj

Configure project for Vulkan (make sure MoltenVK is installed):

cmake --preset macos-vulkan

Build and run mbgl-render (simple command line utility for rendering maps):

cmake --build build-macos-vulkan --target mbgl-render

build-macos-vulkan/bin/mbgl-render -z 7 -x -74 -y 41 --style https://americanamap.org/style.json

open out.png

Linux

This guide explains how to get started building and running MapLibre Native on Linux. The guide focuses on Ubuntu 22.04 or later. The build process should give you a set of .a files that you can use to include MapLibre Native in other C++ projects, as well as a set of executables that you can run to render map tile images and test the project.

Clone the repo

First, clone the repository. This repository uses git submodules, which are required to build the project.

git clone --recurse-submodules -j8 https://github.com/maplibre/maplibre-native.git

cd maplibre-native

Requirements

# Install build tools

apt install build-essential clang cmake ccache ninja-build pkg-config

# Install system dependencies

apt install libcurl4-openssl-dev libglfw3-dev libuv1-dev libpng-dev libicu-dev libjpeg-turbo8-dev libwebp-dev xvfb

Optional: libsqlite3-dev (when not found will use SQLite as vendored dependency).

When using Wayland (now default for linux-opengl preset): libegl1-mesa-dev.

Build with CMake

cmake --preset linux-opengl

cmake --build build-linux-opengl --target mbgl-render

Running mbgl-render

Running mbgl-render --style https://raw.githubusercontent.com/maplibre/demotiles/gh-pages/style.json should produce a map tile image with the default MapLibre styling from the MapLibre demo.

./build-linux-opengl/bin/mbgl-render --style https://raw.githubusercontent.com/maplibre/demotiles/gh-pages/style.json --output out.png

xdg-open out.png

Headless rendering

If you run mbgl-render inside a Docker container or on a remote headless server, you will likely get this error because there is no X server running.

Error: Failed to open X display.

You’ll need to simulate an X server to do any rendering. Install xvfb and xauth and run the following command:

xvfb-run -a ./build-linux-opengl/bin/mbgl-render --style https://raw.githubusercontent.com/maplibre/demotiles/gh-pages/style.json --output out.png

Using your own style/tiles

You can also use the mbgl-render command to render images from your own style or tile set. To do so, you will need a data source and a style JSON file.

For the purposes of this exercise, you can use the zurich_switzerland.mbtiles from here, and the following style.json file. Download both by running the commands below.

wget https://github.com/acalcutt/tileserver-gl/releases/download/test_data/zurich_switzerland.mbtiles

wget https://gist.githubusercontent.com/louwers/d7607270cbd6e3faa05222a09bcb8f7d/raw/4e9532e1760717865df8aeff08f9bcf100f9e8c4/style.json

Note that this style is totally inadequate for any real use beyond testing your custom setup. Replace the source URL mbtiles:///path/to/zurich_switzerland.mbtiles with the actual path to your .mbtiles file. You can use this command if you downloaded both files to the working directory:

sed -i "s#/path/to#$PWD#" style.json
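If sed is not available, the same substitution can be done with a short Python sketch like the one below. It assumes the style keeps the mbtiles URL in the url field of its sources; the function name point_sources_at and the directory /home/user/maps are hypothetical.

```python
def point_sources_at(style: dict, directory: str) -> dict:
    """Replace the '/path/to' placeholder in every source URL with directory."""
    for source in style.get("sources", {}).values():
        url = source.get("url")
        if isinstance(url, str):
            # Only the first occurrence is replaced, like the sed command above.
            source["url"] = url.replace("/path/to", directory, 1)
    return style


# A reduced style with only the part that matters for this example.
style = {
    "sources": {
        "test": {
            "type": "vector",
            "url": "mbtiles:///path/to/zurich_switzerland.mbtiles",
        }
    }
}

updated = point_sources_at(style, "/home/user/maps")
print(updated["sources"]["test"]["url"])
```

To apply this to the downloaded file, load style.json with json.load, call the function with the directory that contains the .mbtiles file, and write the result back with json.dump.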

Next, run the following command.

./build-linux-opengl/bin/mbgl-render --style style.json --output out.png

This should produce an out.png image in your current directory with a barebones image of the world.

Running the render tests

Tip

For more information on the render tests see the dedicated Render Tests article.

To check that the output of the rendering is correct, we compare actual rendered PNGs for simple styles with expected PNGs. The content of the tests used to be stored in the MapLibre GL JS repository, which means that GL JS and Native are mostly pixel-identical in their rendering.

The directory structure of the render tests looks like:

metrics/
  integration/
    render-tests/
      <name-of-style-spec-feature>/
        <name-of-feature-value>/
          expected.png
          style.json

After the render test run, the folder will also contain an actual.png file and a diff.png which is the difference between the expected and the actual image. There is a pixel difference threshold value which is used to decide if a render test passed or failed.
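To make the idea of a pixel difference threshold concrete, here is a simplified Python sketch. It assumes that the fraction of differing pixels is compared against an allowed value. The actual test runner may compute the difference in another way, and the names fraction_different and passes are hypothetical.

```python
def fraction_different(expected, actual, channel_tolerance=0):
    """Fraction of pixels that differ in any channel by more than the tolerance.

    Both images are flat lists of (r, g, b, a) tuples of equal length.
    """
    if not expected or len(expected) != len(actual):
        raise ValueError("images must be non-empty and have the same size")
    different = sum(
        1
        for e, a in zip(expected, actual)
        if any(abs(ec - ac) > channel_tolerance for ec, ac in zip(e, a))
    )
    return different / len(expected)


def passes(expected, actual, allowed=0.0):
    """A test passes when the differing fraction does not exceed allowed."""
    return fraction_different(expected, actual) <= allowed


# Tiny 2x2 "images": one pixel changed from red to yellow.
red = (255, 0, 0, 255)
yellow = (255, 255, 0, 255)
expected = [red, red, red, red]
actual = [red, red, red, yellow]

print(fraction_different(expected, actual))
print(passes(expected, actual, allowed=0.5))
print(passes(expected, actual, allowed=0.0))
```

With a threshold of zero any differing pixel fails the test, while a larger threshold tolerates small rendering differences between platforms.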

Run all render tests with:

./build-linux-opengl/mbgl-render-test-runner --manifestPath metrics/linux-clang8-release-style.json

Or a single test with:

./build-linux-opengl/mbgl-render-test-runner --manifestPath metrics/linux-clang8-release-style.json --filter "render-tests/fill-visibility/visible"

The render test results are summarized in an HTML page located next to the manifest file. For example, running metrics/linux-clang8-release-style.json produces a summary at metrics/linux-clang8-release-style.html.

Building with Docker

These steps will allow you to compile code as described here using a Docker container. All the steps should be executed from the root of the repository.

Important

Not all platform builds are currently supported. Docker builds are a work in progress.

Important

You cannot build MapLibre Native using both Docker and host methods at the same time. If you want to switch, you need to clean the repository first, e.g. by using this command:

git clean -dxfi -e .idea -e .clwb -e .vscode

Build Docker Image

You must build your own Docker image, specific to your user and group IDs, to ensure file permissions stay correct.

# Build docker image from the repo __root__

# Specifying USER_UID and USER_GID allows container to create files with the same owner as the host user,

# and avoids having to pass -u $(id -u):$(id -g) to docker run.

docker build \

-t maplibre-native-image \

--build-arg USER_UID=$(id -u) \

--build-arg USER_GID=$(id -g) \

-f docker/Dockerfile \

docker

Run Docker Container

# Run all build commands using the docker container.

# You can also execute build commands from inside the docker container by starting it without the build command.

docker run --rm -it -v "$PWD:/app/" -v "$PWD/docker/.cache:/home/ubuntu/.cache" maplibre-native-image

You can also use the container to run just one specific command, e.g. cmake or bazel. Any downloaded dependencies will be cached in the docker/.cache directory.

docker run --rm -it -v "$PWD:/app/" -v "$PWD/docker/.cache:/home/ubuntu/.cache" maplibre-native-image cmake ...

Windows

This guide explains how to build MapLibre Native on Windows.

The files produced by building mbgl-core target can be reused as libraries in other projects.

Some targets are executables (test runners and GLFW) and Node targets are shared libraries to be used in NodeJS applications.

Building with both Microsoft Visual Studio and MSYS2 is supported.

Building with Microsoft Visual Studio

Prerequisites

The build was tested with Microsoft Visual Studio 2022. Earlier versions are not guaranteed to work, but Microsoft Visual Studio 2019 might work as well.

To install the required Visual Studio components, open Visual Studio Installer and check the Desktop Development with C++ option. Make sure C++ CMake tools for Windows is selected in the right pane. If git is not already installed, select the Git for Windows option in Individual Components. When Visual Studio finishes the install process, everything is ready to start.

Downloading sources

Open x64 Native Tools Command Prompt for VS 2022 and then clone the repository:

git clone --config core.longpaths=true --depth 1 --recurse-submodules -j8 https://github.com/maplibre/maplibre-native.git

cd maplibre-native

Note

The core.longpaths=true config is necessary, because without it you will get a lot of Filename too long errors. If you have this configuration set globally (git config --system core.longpaths=true), you can omit the --config core.longpaths=true portion of the clone command.

Configuring

Configure the build with the following command, replacing <preset> with opengl, egl or vulkan, which are the rendering engines you can use. If you don’t know which one to choose, just use opengl:

cmake --preset windows-<preset>

It will take some time to build and install all components on which MapLibre depends.

Building

Finally, build the project with the following command, again replacing <preset> with the value you chose in the configure step:

cmake --build build-windows-<preset>

Building with Microsoft Visual Studio

Just add the -G "Visual Studio 17 2022" option (or the generator for the Visual Studio version you have) to the configure command:

cmake --preset windows-<preset> -G "Visual Studio 17 2022"

Once the configure step is done, open the file build-windows-<preset>\Mapbox GL Native.sln. Build the target ALL_BUILD to build all targets, or pick a specific target. Don’t forget to pick a build configuration (Release, RelWithDebInfo, MinSizeRel or Debug), otherwise the project will be built with the default configuration (Debug).

Testing

If all went well and target mbgl-render or ALL_BUILD was chosen, there should now be a build-windows-<preset>\bin\mbgl-render.exe binary that you can run to generate map tile images. To test that it is working properly, run the following command.

.\build-windows-<preset>\bin\mbgl-render.exe --style https://raw.githubusercontent.com/maplibre/demotiles/gh-pages/style.json --output out.png

This should produce an out.png map tile image with the default MapLibre styling from the MapLibre demo.

Using your own style/tiles

You can also use the mbgl-render command to render images from your own style or tile set. To do so, you will need a data source and a style JSON file.

For the purposes of this exercise, you can use the zurich_switzerland.mbtiles from here, and the following style.json file.

{
  "version": 8,
  "name": "Test style",
  "center": [8.54806714892635, 47.37180823552663],
  "sources": {
    "test": {
      "type": "vector",
      "url": "mbtiles:///path/to/zurich_switzerland.mbtiles"
    }
  },
  "layers": [
    {
      "id": "background",
      "type": "background",
      "paint": {
        "background-color": "hsl(47, 26%, 88%)"
      }
    },
    {
      "id": "water",
      "type": "fill",
      "source": "test",
      "source-layer": "water",
      "filter": ["==", "$type", "Polygon"],
      "paint": {
        "fill-color": "hsl(205, 56%, 73%)"
      }
    },
    {
      "id": "admin_country",
      "type": "line",
      "source": "test",
      "source-layer": "boundary",
      "filter": ["all", ["<=", "admin_level", 2], ["==", "$type", "LineString"]],
      "layout": {
        "line-cap": "round",
        "line-join": "round"
      },
      "paint": {
        "line-color": "hsla(0, 8%, 22%, 0.51)",
        "line-width": {
          "base": 1.3,
          "stops": [[3, 0.5], [22, 15]]
        }
      }
    }
  ]
}

Note that this style is totally inadequate for any real use beyond testing your custom setup. Don’t forget to replace the source URL "mbtiles:///path/to/zurich_switzerland.mbtiles" with the actual path to your mbtiles file.
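Replacing the source URL by hand is easy to forget, so the substitution can also be scripted. The following is a minimal Python sketch and not part of MapLibre Native: the helper name with_mbtiles_source and the path /data/zurich_switzerland.mbtiles are hypothetical, and it assumes the mbtiles:// prefix is simply followed by an absolute path, as in the placeholder URL above.

```python
import copy
import json


def with_mbtiles_source(style: dict, source_name: str, mbtiles_path: str) -> dict:
    """Return a copy of `style` whose named source points at `mbtiles_path`.

    Assumption: the URL is "mbtiles://" followed by an absolute path, which
    mirrors the placeholder "mbtiles:///path/to/..." used in the style above.
    """
    if source_name not in style.get("sources", {}):
        raise KeyError(f"style has no source named {source_name!r}")
    patched = copy.deepcopy(style)  # leave the caller's style untouched
    patched["sources"][source_name]["url"] = "mbtiles://" + mbtiles_path
    return patched


# Trimmed-down version of the test style, enough to show the substitution.
style = {
    "version": 8,
    "sources": {
        "test": {
            "type": "vector",
            "url": "mbtiles:///path/to/zurich_switzerland.mbtiles",
        }
    },
    "layers": [],
}

# Hypothetical location of the downloaded tile set.
patched = with_mbtiles_source(style, "test", "/data/zurich_switzerland.mbtiles")
print(json.dumps(patched["sources"]["test"], indent=2))
```

Working on a copy keeps the template style reusable for several tile sets.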

From your maplibre-native dir, run the following command.

.\build-windows-<preset>\bin\mbgl-render.exe --style path\to\style.json --output out.png

This should produce an out.png image in your current directory with a barebones image of the world.

Building with MSYS2

Prerequisites

You must have MSYS2 installed. Then launch an MSYS2 environment window, which can be UCRT64, CLANG64, CLANGARM64 or MINGW64.

You need to install the required packages:

pacman -S --needed \
  git \
  ${MINGW_PACKAGE_PREFIX}-toolchain \
  ${MINGW_PACKAGE_PREFIX}-clang \
  ${MINGW_PACKAGE_PREFIX}-cmake \
  ${MINGW_PACKAGE_PREFIX}-angleproject \
  ${MINGW_PACKAGE_PREFIX}-curl-winssl \
  ${MINGW_PACKAGE_PREFIX}-dlfcn \
  ${MINGW_PACKAGE_PREFIX}-glfw \
  ${MINGW_PACKAGE_PREFIX}-icu \
  ${MINGW_PACKAGE_PREFIX}-libjpeg-turbo \
  ${MINGW_PACKAGE_PREFIX}-libpng \
  ${MINGW_PACKAGE_PREFIX}-libwebp \
  ${MINGW_PACKAGE_PREFIX}-libuv

Then everything is ready to start.

Downloading sources

Just clone the repository:

git clone --depth 1 --recurse-submodules -j8 https://github.com/maplibre/maplibre-native.git

cd maplibre-native

Configuring

Configure the build with the following command, replacing <preset> with opengl, egl or vulkan, which are the rendering engines you can use. If you don’t know which one to choose, just use opengl:

cmake --preset windows-<preset> -DCMAKE_C_COMPILER=clang -DCMAKE_CXX_COMPILER=clang++

Building

Finally, build the project with the following command, again replacing <preset> with the value you chose in the configure step:

cmake --build build-windows-<preset>

Testing

If all went well and target mbgl-render or ALL_BUILD was chosen, there should now be a build-windows-<preset>/bin/mbgl-render.exe binary that you can run to generate map tile images. To test that it is working properly, run the following command.

./build-windows-<preset>/bin/mbgl-render.exe --style https://raw.githubusercontent.com/maplibre/demotiles/gh-pages/style.json --output out.png

This should produce an out.png map tile image with the default MapLibre styling from the MapLibre demo.

Using your own style/tiles

You can also use the mbgl-render command to render images from your own style or tile set. To do so, you will need a data source and a style JSON file.

For the purposes of this exercise, you can use the zurich_switzerland.mbtiles from here, and the following style.json file.

{
  "version": 8,
  "name": "Test style",
  "center": [8.54806714892635, 47.37180823552663],
  "sources": {
    "test": {
      "type": "vector",
      "url": "mbtiles:///path/to/zurich_switzerland.mbtiles"
    }
  },
  "layers": [
    {
      "id": "background",
      "type": "background",
      "paint": {
        "background-color": "hsl(47, 26%, 88%)"
      }
    },
    {
      "id": "water",
      "type": "fill",
      "source": "test",
      "source-layer": "water",
      "filter": ["==", "$type", "Polygon"],
      "paint": {
        "fill-color": "hsl(205, 56%, 73%)"
      }
    },
    {
      "id": "admin_country",
      "type": "line",
      "source": "test",
      "source-layer": "boundary",
      "filter": ["all", ["<=", "admin_level", 2], ["==", "$type", "LineString"]],
      "layout": {
        "line-cap": "round",
        "line-join": "round"
      },
      "paint": {
        "line-color": "hsla(0, 8%, 22%, 0.51)",
        "line-width": {
          "base": 1.3,
          "stops": [[3, 0.5], [22, 15]]
        }
      }
    }
  ]
}

Note that this style is totally inadequate for any real use beyond testing your custom setup. Don’t forget to replace the source URL "mbtiles:///path/to/zurich_switzerland.mbtiles" with the actual path to your mbtiles file.

From your maplibre-native/ dir, run the following command.

./build-windows-<preset>/bin/mbgl-render.exe --style path/to/style.json --output out.png

This should produce an out.png image in your current directory with a barebones image of the world.

Release Policy

Note

The following release policy applies specifically to MapLibre Android and MapLibre iOS.

- We use semantic versioning. Breaking changes will always result in a major release.

- Despite having extensive tests in place, as a FOSS project we have limited QA testing capabilities. When major changes have taken place, we may opt to put out a pre-release to let the community help with testing.

- In principle the main branch should always be in a releasable state.

- The release process is automated and documented (see Release MapLibre iOS and Release MapLibre Android). Anyone with write access should be able to push out a release.

- There is no fixed release cadence, but you are welcome to request a release on any of the communication channels.

- We do not have long-term support (LTS) releases.

- If you need a feature or a bugfix ported to an old version of MapLibre, you need to do the backporting yourself (see steps below).

Backporting

We understand that MapLibre is used in large mission critical applications where updating to the latest version is not always immediately possible. We do not have the capacity to offer LTS releases, but we do want to facilitate backporting.

- Create an issue and request that a branch is created from the release you want to target. Also mention the feature or bugfix you want to backport.

- Once the branch is created, make a PR that includes the feature or bugfix and that targets this branch. Also update the relevant changelog.

- When the PR is approved and merged, a release is attempted. If the release workflow significantly changed and the release fails, you may need to help to backport changes to the release workflow as well.

The branch names for older versions follow a pattern as follows: platform-x.x.x (e.g. android-10.x.x for the MapLibre Native Android 10.x.x release series). These branches have some minimal branch protection (a pull request is required to push changes to them).

Render Tests

Note

See also Android Tests and iOS Tests for some platform-specific information on the render tests.

Render tests verify the correctness and consistency of MapLibre Native’s rendering. Note that ‘render test’ is a bit of a misnomer, because we sometimes also use the term for tests that do not really test rendering behavior, such as expression tests and query tests. In addition, these ‘render tests’ allow a wide variety of operations and probes (which write out metrics) for things like GPU memory allocations, memory usage, network requests and FPS, so these tests are quite a bit more versatile than just verifying rendering behavior.

Render Test Runner CLI Options

When using CMake, the render test runner is an executable available as mbgl-render-test-runner in the build directory.

| Option / Argument | Description | Required? |
|---|---|---|
| -h, --help | Display the help menu and exit. | No |
| -r, --recycle-map | Toggle reusing the map object between tests. If set, the map object is reused; otherwise, it’s reset for each test. | No |
| -s, --shuffle | Toggle shuffling the order of tests based on the manifest file. | No |
| -o, --online | Toggle online mode. If set, tests can make network requests. By default (--online not specified), tests run in offline mode, forcing resource loading from the cache only. | No |
| --seed <uint32_t> | Set the seed for shuffling tests. Only relevant if --shuffle is also used. Defaults to 1 if --shuffle is used without --seed. | No |
| -p, --manifestPath <string> | Specifies the path to the test manifest JSON file, which defines test configurations, paths, and potentially filters/ignores. | Yes |
| -f, --filter <string> | Provides a regular expression used to filter which tests (based on their path/ID) should be run. Only tests matching the regex will be executed. | No |
| -u, --update default \| platform \| metrics \| rebaseline | Sets the mode for updating test expectation results. default: updates generic render test expectation images/JSON; platform: updates platform-specific render test expectation images/JSON; metrics: updates expected metrics for the configuration defined by the manifest; rebaseline: updates or creates expected metrics for the configuration defined by the manifest. | No |
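When the runner is driven from a script, the options above can be assembled programmatically. The following Python sketch only builds an argument list from the flags documented in the table; the function name runner_args and its parameter names are hypothetical and not part of MapLibre Native.

```python
from typing import List, Optional

# Update modes accepted by --update, as listed in the table above.
UPDATE_MODES = ("default", "platform", "metrics", "rebaseline")


def runner_args(
    manifest_path: str,
    test_filter: Optional[str] = None,
    update: Optional[str] = None,
    shuffle: bool = False,
    seed: Optional[int] = None,
    online: bool = False,
    recycle_map: bool = False,
) -> List[str]:
    """Build the argument list for mbgl-render-test-runner."""
    if update is not None and update not in UPDATE_MODES:
        raise ValueError(f"unknown update mode: {update!r}")

    args = ["--manifestPath", manifest_path]  # the only required option
    if test_filter is not None:
        args += ["--filter", test_filter]
    if update is not None:
        args += ["--update", update]
    if shuffle:
        args.append("--shuffle")
    if seed is not None:
        args += ["--seed", str(seed)]
    if online:
        args.append("--online")
    if recycle_map:
        args.append("--recycle-map")
    return args


# Example: run only the circle-radius tests from a Linux manifest.
args = runner_args(
    "metrics/linux-clang8-release-style.json",
    test_filter="circle-radius/.*",
)
print(" ".join(["./build/mbgl-render-test-runner", *args]))
```

The resulting list can be passed to subprocess.run together with the path of the runner executable in your build directory.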

Source Code Organization

render-test

This directory contains the C++ source code for the common render test runner, the manifest parser and the CLI tool.

- render-test/android: standalone Gradle project with an app that runs the render test runner.

- render-test/ios: source code for an Objective-C app that encapsulates the render test runner.

metrics

The JSON files in this directory are the manifests (to be passed to the render test CLI tool). This directory also contains many directories that store metrics used by the tests. Other files/directories include:

- cache-style.db, cache-metrics.db: pre-populated cache (SQLite database file) so the tests can run offline. You may need to update this database when you need a new resource available during test execution.

- binary-size: binary-size checks. Not used right now, see #3379.

- expectations: expectations for various platforms. E.g. expectations/platform-android is referenced by the android-render-test-runner-metrics.json manifest under its expectation_paths key.

- ignores: contains JSON files that map a test path (the key) to the reason why that test is ignored (the value). For example:

  { "expression-tests/collator/accent-equals-de": "Locale-specific behavior changes based on platform." }

  Manifests can have a key ignore_paths with an array of ignore files. For example:

  { ... "ignore_paths": [ "ignores/platform-all.json", "ignores/platform-linux.json", "ignores/platform-android.json" ], ... }

metrics/integration

- data, geojson, glyphs, image, sprites, styles, tiles, tilesets, video: various data used by the render tests.

- expression-tests: tests that verify the behavior of expressions of the MapLibre Style Spec.

- query-tests: these tests test the behavior of the queryRenderedFeatures API which, as the name suggests, allows you to query the rendered features.

- render-tests: location of render tests. Each render test contains a style.json and an expected.png image. This directory tree is generally organized by style specification property: background-color, line-width, etc., with a second level of directories below that for individual tests.
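The ignore mechanism described under metrics (ignore files that map a test path to a reason, referenced from a manifest through ignore_paths) can be sketched in Python as follows. This is an illustration, not the runner's implementation: the function names are hypothetical, and resolving the entries relative to a caller-supplied base directory is an assumption.

```python
import json
from pathlib import Path
from typing import Dict, Optional


def load_ignores(manifest: dict, base_dir: Path) -> Dict[str, str]:
    """Merge every ignore file listed under the manifest's "ignore_paths" key.

    Assumption: entries are resolved relative to `base_dir`; the real runner
    may resolve them differently.
    """
    merged: Dict[str, str] = {}
    for relative_path in manifest.get("ignore_paths", []):
        with open(base_dir / relative_path, encoding="utf-8") as handle:
            merged.update(json.load(handle))  # test path -> reason
    return merged


def ignore_reason(test_path: str, ignores: Dict[str, str]) -> Optional[str]:
    """Return why `test_path` is ignored, or None if it should run."""
    return ignores.get(test_path)
```

With the example ignore file shown earlier, ignore_reason("expression-tests/collator/accent-equals-de", ignores) would return the locale-related reason, while a test path that is not listed returns None.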

Running tests

To run the entire integration test suite (both render and query tests), from within the maplibre-native directory on Linux run the command:

./build/mbgl-render-test-runner --manifestPath metrics/linux-clang8-release-style.json

Running specific tests

To run a subset of tests or an individual test, you can pass a filter to the mbgl-render-test-runner executable with -f. For example, to run all the tests for a given property, e.g. circle-radius:

$ build-macos-vulkan/mbgl-render-test-runner --manifestPath=metrics/macos-xcode11-release-style.json -f 'circle-radius/.*'

* passed query-tests/circle-radius/feature-state

* passed query-tests/circle-radius/zoom-and-property-function

* passed query-tests/circle-radius/property-function

* passed query-tests/circle-radius/outside

* passed query-tests/circle-radius/tile-boundary

* passed query-tests/circle-radius/inside

* passed query-tests/circle-radius/multiple-layers

* passed render-tests/circle-radius/zoom-and-property-function

* passed render-tests/circle-radius/literal

* passed render-tests/circle-radius/property-function

* passed render-tests/circle-radius/default

* passed render-tests/circle-radius/function

* passed render-tests/circle-radius/antimeridian

13 passed (100.0%)

Results at: /Users/bart/src/maplibre-native/metrics/macos-xcode11-release-style.html

Writing new tests

To add a new render test:

1. Create a new directory test/integration/render-tests/<property-name>/<new-test-name>.

2. Create a new style.json file within that directory, specifying the map to load. Feel free to copy & modify one of the existing style.json files from the render-tests subdirectories.

3. Generate an expected.png image from the given style with

   $ ./build/mbgl-render-test-runner --update default --manifestPath=... -f '<property-name>/<new-test-name>'

4. Manually inspect expected.png to verify it looks as expected.

5. Commit the new style.json and expected.png.

Note

These notes are partially outdated since the renderer modularization.

Design

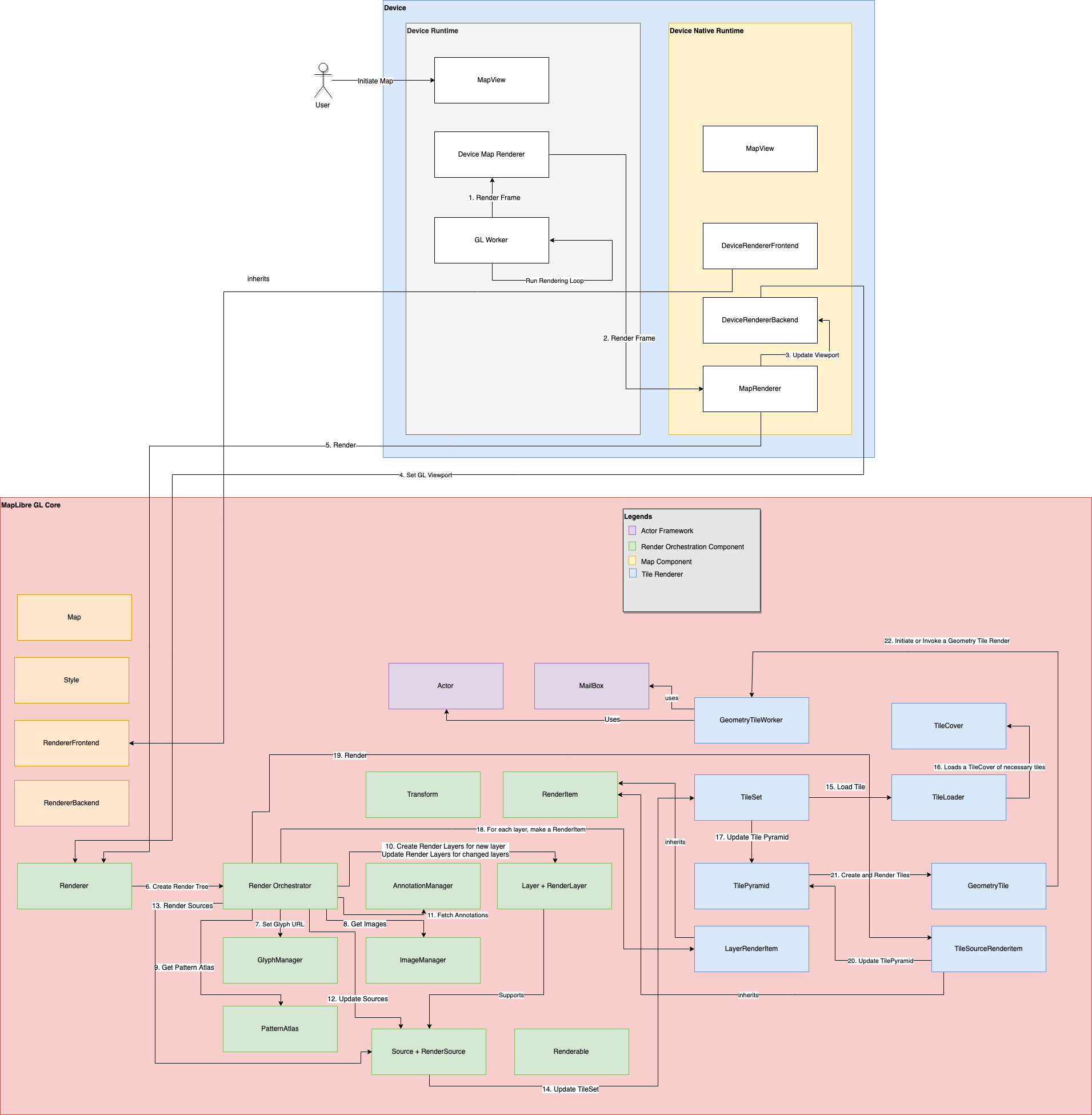

This section is dedicated to documenting the current state of MapLibre Native as of the end of 2022. The Architectural Problems and Recommendations section notes recommendations for future improvements from an architectural perspective.

Ten Thousand Foot View

graph TD

subgraph Platform

MV[Map View]

MR[Map Renderer]

end

subgraph "MapLibre Native Core"

M[Map]

S[Style]

L[Layers]

I[Images]

Glyphs

R[Renderer]

TW[TileWorker]

end

%% Platform Interactions

MV -- initializes --> MR

MV -- Initializes --> M

%% Core Interactions

MR -- runs the rendering loop --> R

R -- Renders Map --> M

L -- Fetches --> S

L -- Fetches --> I

R -- Sends messages to generate tiles --> TW

TW -- Prepares layers to be rendered --> L

L -- Fetches --> Glyphs

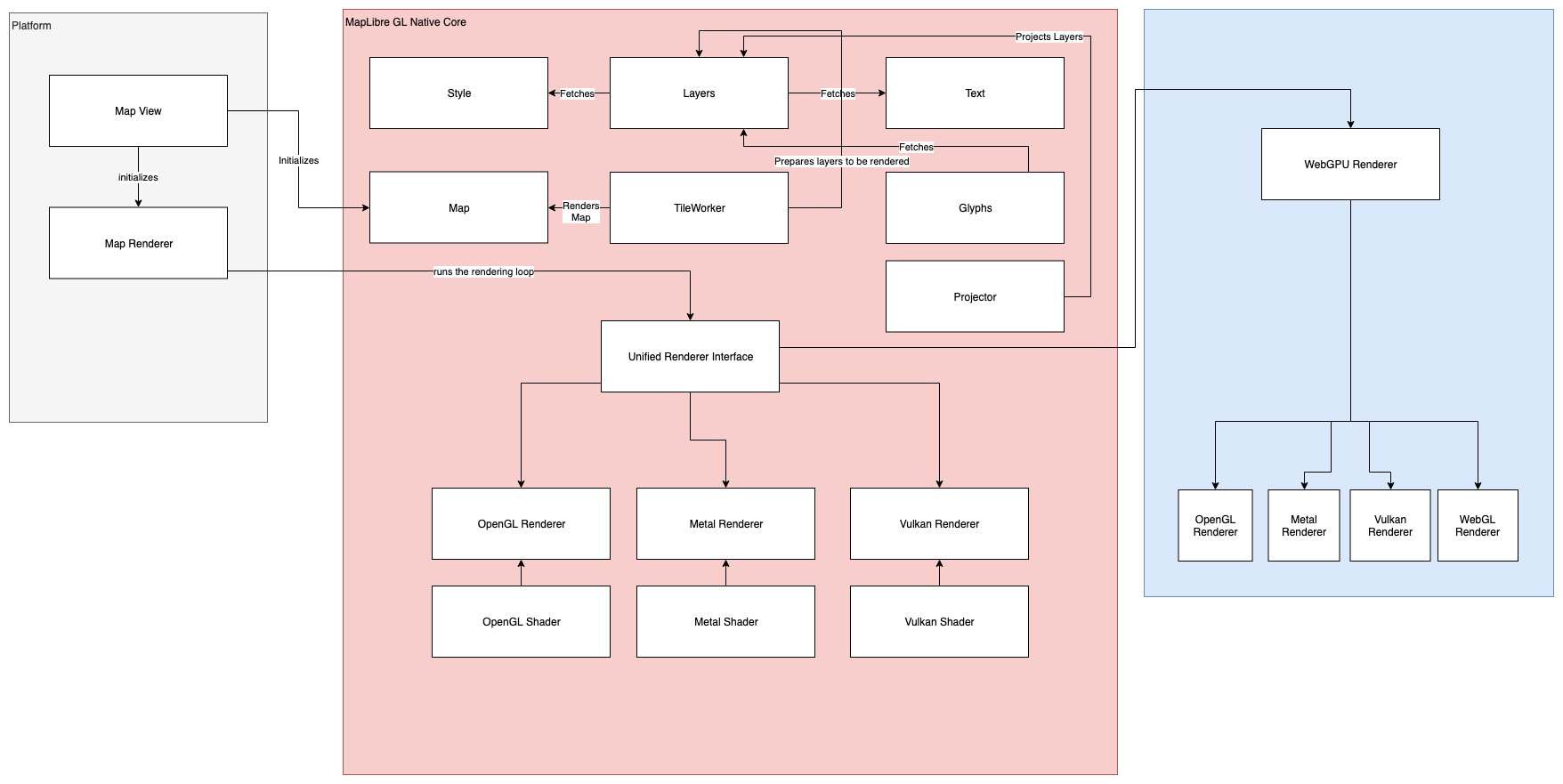

Figure 1: MapLibre Native Components – Ten Thousand Foot view

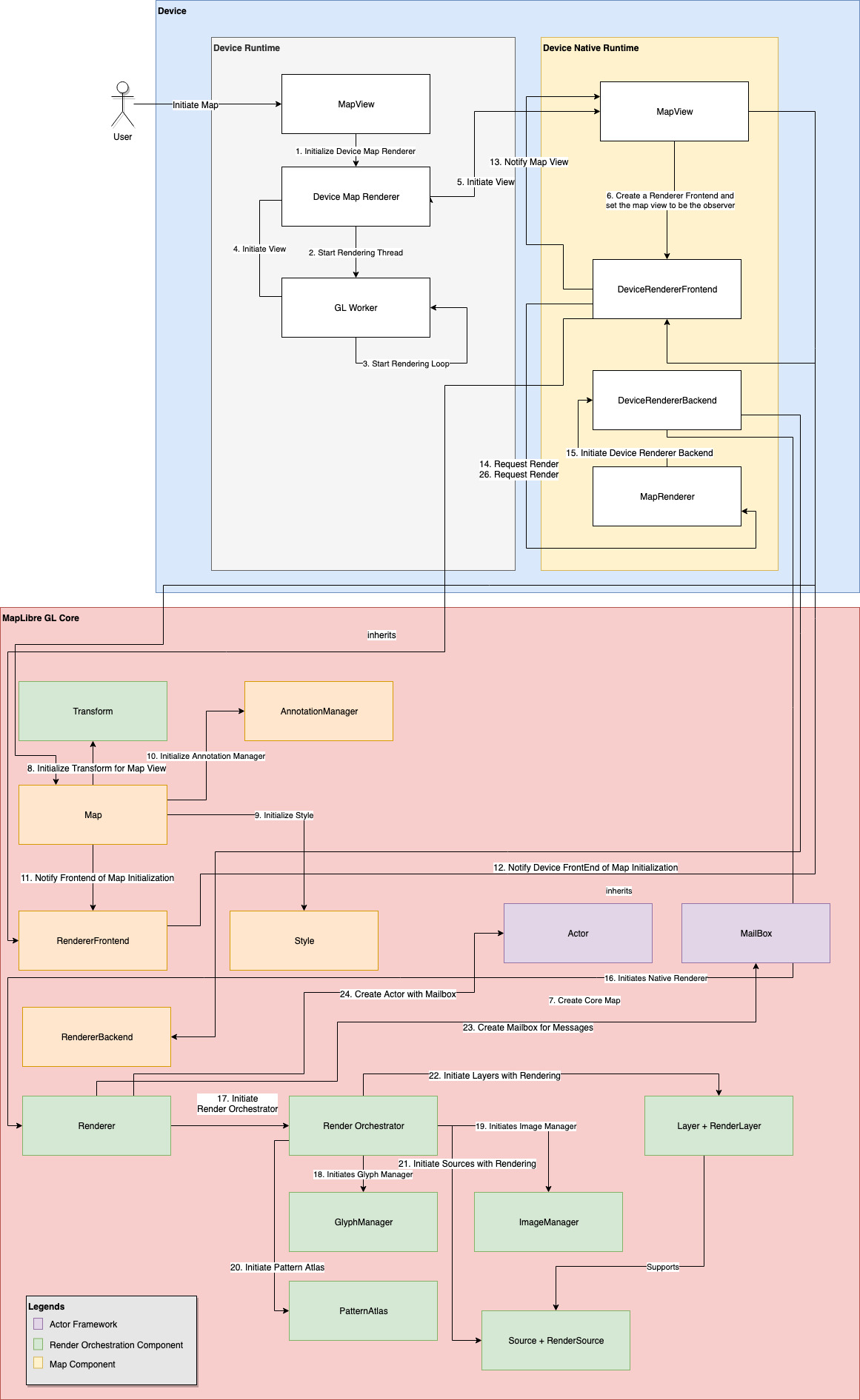

From ten thousand feet, MapLibre Native is composed of a Map View and a Renderer. MapLibre Native supports iOS, Android, Linux, Qt, macOS, and Node.js at the time of writing. Each of these platforms has its own Map View and Map Renderer component. A Map Renderer talks to a shared codebase that renders the map using the device GPU. At the time of writing, this shared code supports OpenGL as its rendering API.

Apart from the platform code, MapLibre Native offers shared implementation for Style, Layers, Text, Glyphs, and Tiles.

To summarize:

- Map View is responsible for viewing a slippy map, a term referring to web maps that let a user zoom and pan around. Each platform has its own Map View.

- Map Renderer is composed of two parts: a platform-specific map rendering loop and a cross-platform renderer backend, denoted as Renderer in Figure 1.

- A map renderer uses a Tile Worker to render an individual map tile. It uses an Actor Framework to send messages to a Tile Worker to produce said tiles.

- A tile worker prepares a tile one layer at a time. A map tile is composed of multiple layers.

- A Layer requires Style, Glyphs, and Sprites to be ready for rendering. Features rendered in a Layer come from data sources, and a Layer is composed of tiles produced from said features.

We will look a bit more into these components going forward[^1].

Map View

A Map View is a reusable map viewer that renders a raster or vector map in different platform-specific viewports. It carries the map configuration that is common across the platforms. This configuration ranges from map viewport size, pixel ratio, tileset URL, and style to collision configuration for text and annotations[^2]. A Map View does not contain any rendering capability of its own. It publishes and listens to events through observers[^3].

Figure 1 does not display the explicit use of observers for brevity.

Observers

Observers are a group of components that listen for and publish events from and to a Map View. Observers come in two major flavours across platforms: a map observer and a rendering observer. A map observer is responsible for handling events for map configuration changes. This includes events for loading and updating the style, events for the map becoming idle, events for the initialization and completion of rendering, and events for camera orientation changes. A rendering observer, on the other hand, deals with events that are directly related to frame-by-frame map rendering. A rendering observer might publish a rendering event to map observers, but these are usually rendering initialization or completion events. One key thing to remember here is that a map configuration change can lead to rendering changes, such as a camera switch, map center change, viewport size change, zoom, or pitch change.

Style

The Style component embodies a MapLibre Style Document. A style document is a JSON object that defines the visual appearance of a map: the order to draw it in, what to draw, and how to style the data while drawing it. The Style sub-component is composed of classes and functions that allow downloading and configuring a style. A style can be fetched from a remote HTTP resource or configured locally. The style component also carries a Sprite Loader to load sprites, the remote HTTP URL for glyphs, the layers to draw and the sources of data to draw. A sprite is a single image that contains all the icons included in a style. Layers are composed of sources, where a source could be a vector tile set, a raster tile set, or GeoJSON data.

To complete initialization of a map component, we need to initialize a map, style with source and layers, and observers.

Layer

Layer is an overloaded term in the context of MapLibre Native. Layer can mean either of the following:

1. From the point of view of the data that needs to be rendered on the map, each map tile is composed of layers of data. Each layer, in this context, contains features[^4] to be rendered in a map. These features are defined by a source. Each layer is tied to a source.

2. From the point of view of style, a style’s layers property lists all the layers available in that style. A single style sheet can be applied atop one or many layers. This layer definition brings together the data to be rendered and the style to be applied to said layer.

When this document uses the word layer in the context of rendering, it refers to definition 2.

Glyphs

A glyph is a single representation of a character. A font is a map of characters[^5]. Map tiles use text labels to show the names of cities, administrative boundaries, or streets. Map tiles also need to show icons for amenities like bus stops and parks. A map style uses the character map from fonts to display labels and icons. Collectively these are called glyphs.

Glyphs require resizing, rotation, and a halo for clarity in nearly every interaction with the map. To achieve this, all glyphs are pre-rendered in a shared texture, called a texture atlas. This atlas is packed inside a protobuf container. Each element of the atlas is an individual texture representing the signed distance field (SDF) of the character to render.

Each glyph bitmap inside is a field of floats, called signed distances. It represents how a glyph should be drawn by the GPU. Each glyph is rendered at font size 24 and stores the distance to the next outline in every pixel. Simply put, if a pixel is inside the glyph outline, it has a value between 192 and 255. Every pixel outside the glyph outline has a value between 0 and 191. This creates a black and white atlas of all the glyphs inside.
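The inside/outside rule quoted above can be illustrated with a few lines of Python. This is a sketch under stated assumptions, not the shader MapLibre Native uses: it treats the distance values as 8-bit integers in the quoted ranges, and the 5x5 bitmap is made-up sample data.

```python
from typing import List

INSIDE_MIN = 192  # values 192-255 are inside the outline, 0-191 are outside


def is_inside(sdf_value: int) -> bool:
    """Classify one signed-distance sample against the glyph outline."""
    if not 0 <= sdf_value <= 255:
        raise ValueError("SDF values are expected in the range 0-255")
    return sdf_value >= INSIDE_MIN


def to_mask(bitmap: List[List[int]]) -> List[str]:
    """Render a bitmap as text: '#' for inside pixels, '.' for outside."""
    return ["".join("#" if is_inside(v) else "." for v in row) for row in bitmap]


# Hypothetical sample: a blob whose values grow towards the centre.
glyph = [
    [40, 120, 150, 120, 40],
    [120, 200, 230, 200, 120],
    [150, 230, 255, 230, 150],
    [120, 200, 230, 200, 120],
    [40, 120, 150, 120, 40],
]

for line in to_mask(glyph):
    print(line)
```

Because every pixel stores a distance rather than plain coverage, a renderer can move the threshold or blend around it to resize a glyph or draw a halo without re-rasterizing the font.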

This document currently does not have a dedicated section on text rendering. When it does, we will dive more into glyph rendering.

Actor Framework

MapLibre Native is used on mobile platforms. To be performant in underpowered environments, MapLibre Native leverages message passing across threads to render frames asynchronously. The threading architecture realizes this using the Actor interface[^6]. In reality the messages are raw pointers, and they range from raw messages to actionable messages. By actionable message this document means an anonymous function that is passed as a message between actors. These arbitrary messages are immutable by design. The current implementation of the Actor framework consists of two major components, a MailBox and an Actor. A MailBox is attached to a specific type of Message. In the current implementation, these are rendering events that render layers, sources, and subsequently tiles. An Actor is an independent thread passing messages to others.
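To make the MailBox and Actor roles concrete, here is a minimal Python sketch of the pattern as this section describes it. It is not the MapLibre Native C++ API: the class and method names are illustrative, and each actor is given its own thread purely to keep the example short.

```python
import queue
import threading


class Mailbox:
    """FIFO queue of messages waiting to be processed by one actor."""

    def __init__(self) -> None:
        self._queue: queue.Queue = queue.Queue()

    def push(self, message) -> None:
        self._queue.put(message)

    def pop(self):
        return self._queue.get()  # blocks until a message is available


class Actor:
    """Owns a target object; only the actor's thread ever touches it."""

    _STOP = object()  # sentinel message that ends the loop

    def __init__(self, target) -> None:
        self._target = target
        self._mailbox = Mailbox()
        self._thread = threading.Thread(target=self._run, daemon=True)
        self._thread.start()

    def invoke(self, fn, *args) -> None:
        # An "actionable message": an anonymous function queued for later.
        self._mailbox.push(lambda: fn(self._target, *args))

    def stop(self) -> None:
        self._mailbox.push(Actor._STOP)
        self._thread.join()

    def _run(self) -> None:
        while True:
            message = self._mailbox.pop()
            if message is Actor._STOP:
                break
            message()


class FakeTileWorker:
    """Hypothetical stand-in for a tile worker; it only records tile IDs."""

    def __init__(self) -> None:
        self.parsed = []

    def parse(self, tile_id: str) -> None:
        self.parsed.append(tile_id)


worker = FakeTileWorker()
actor = Actor(worker)
actor.invoke(FakeTileWorker.parse, "11/585/783")
actor.invoke(FakeTileWorker.parse, "11/586/783")
actor.stop()  # processes everything queued before the stop message
print(worker.parsed)
```

Callers never touch the worker directly. They only enqueue messages, so the worker's state needs no locks as long as a single thread drains the mailbox.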

Renderer

A Map Renderer translates geospatial features in a vector or raster tile into the rendered or rasterized map tiles shown in a slippy map. MapLibre Native uses a Renderer component to translate map tiles fetched from a tile server into a rendered map.

MapLibre Native uses a pool of workers. These workers are responsible for background tile generation. A render thread continuously renders the current state of the map with the tiles available at the time of rendering. In JavaScript and iOS, the render thread is the same as the foreground/UI thread. For performance reasons, the Android render thread is separated from the UI thread. Changes to the map on the UI due to user interaction are batched together and sent to the render thread for processing. The platforms also include worker threads for platform-specific tasks such as running HTTP requests in the background, but the core code is agnostic about where those tasks get performed. Each platform is required to provide its own implementation of concurrency/threading primitives for MapLibre Native core to use. The platform code is also free to use its own threading model. For example, Android uses a GLSurfaceView with a GLThread, whereas the iOS SDK uses Grand Central Dispatch for running asynchronous tasks.

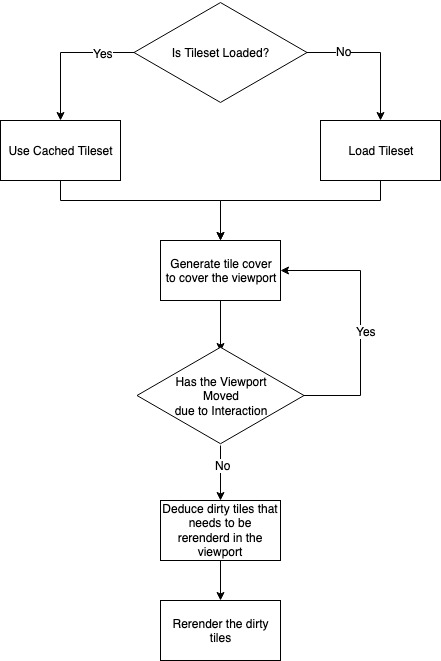

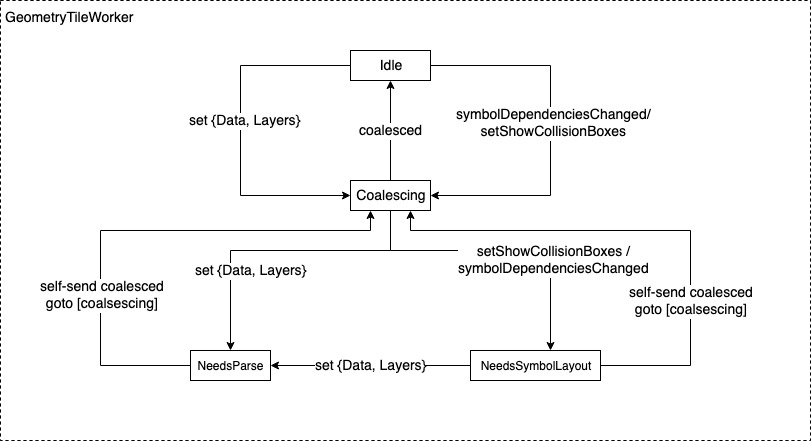

Tile Worker

We noted earlier in this document that MapLibre Native uses message passing to communicate with the renderer. These messages are immutable by design, and the render loop checks for them in each iteration. To simplify, there is only one thread allocated for the rendering loop, background or foreground[^7]. A Tile Worker is a thread that prepares a tile of a specific type. A Geometry Tile Worker, as its name suggests, prepares tiles that contain vector geometry for rendering. Following the same pattern, MapLibre Native offers tile workers for raster tiles and elevation tiles. Messages sent to a tile worker can be processed by any thread, with the assumption that only one thread at a time will work with a tile worker instance.

Tile workers are not based on a common interface or base class. Tiles are. MapLibre Native offers a base Tile class, from which the Raster, Geometry, and Elevation tile classes inherit. Through this inheritance MapLibre Native maintains the association of Tile types and tile workers. Any Tile Worker is an actor that accepts messages of a specific Tile type. For a Geometry Tile Worker, the type is a Geometry Tile.

To read in depth about the workflow of a Geometry Tile Worker, please check the Geometry Tile Worker chapter.

[^1]: To read in depth about the data flow for map initialization and rendering in Android, please check Android Map Rendering Data Flow.

[^2]: This document describes a simplified configuration for brevity. The configuration also includes viewport mode, constrain mode, and north orientation.

[^3]: Platform SDKs might use a wrapper map view class atop the map component. This helps establish a contract with a device runtime that runs a different language. Android is a good example of this behaviour.

[^4]: A feature is the spatial description of a real-world entity such as a road or a utility pole. Any geometry that is displayed on a map tile is either an individual feature or a part of a feature.

[^5]: This document uses the terms glyph and character interchangeably. In reality, a single character can have multiple glyphs. These are called alternates. On the other hand, a single glyph can represent multiple characters. These are called ligatures.

[^6]: In the JavaScript counterpart, MapLibre GL JS, this is achieved through the use of web workers.

[^7]: In iOS, the render thread runs on the UI thread, that is, it is a foreground thread. Android covers a wider range of devices in terms of battery capacity. Hence, Android prefers to use a background thread for the rendering loop.

Coordinate System

Before we jump into the coordinate system of MapLibre Native, let’s quickly review the concepts of translating a position on the face of Earth to a map tile. This is not a comprehensive refresher of coordinate reference systems or rendering basics. Rather this intends to guide the reader on what we want to achieve in this section.

We start from Earth, which is a geoid that is mathematically expensive to use as a reference coordinate system. Thus, we approximate the Earth with a reference ellipsoid, or datum. Within this document’s scope, WGS84 is used as the canonical datum. Our goal is to map geometries defined by WGS84 longitude, latitude coordinate pairs onto a map tile.

Instead of translating a full geometry, in the following subsections, we will project a WGS 84 point to a map tile rendered in MapLibre Native.

World vs Earth

This document uses the word Earth when it refers to the planet on which we all live and for which we make map tiles. This document uses the word World to denote the world MapLibre Native renders. In rendering terms, the word world means the world to render. It could be a set of cones and boxes, a modeled city, or anything else composed of 3D objects. MapLibre Native renders map tiles in a range of zoom levels on a 3D plane. Map tiles are already produced from a WGS84 ellipsoid. Therefore, when this document uses the word World, it means the 3D plane containing a set of map tiles to be rendered, not the Earth.

Transformations

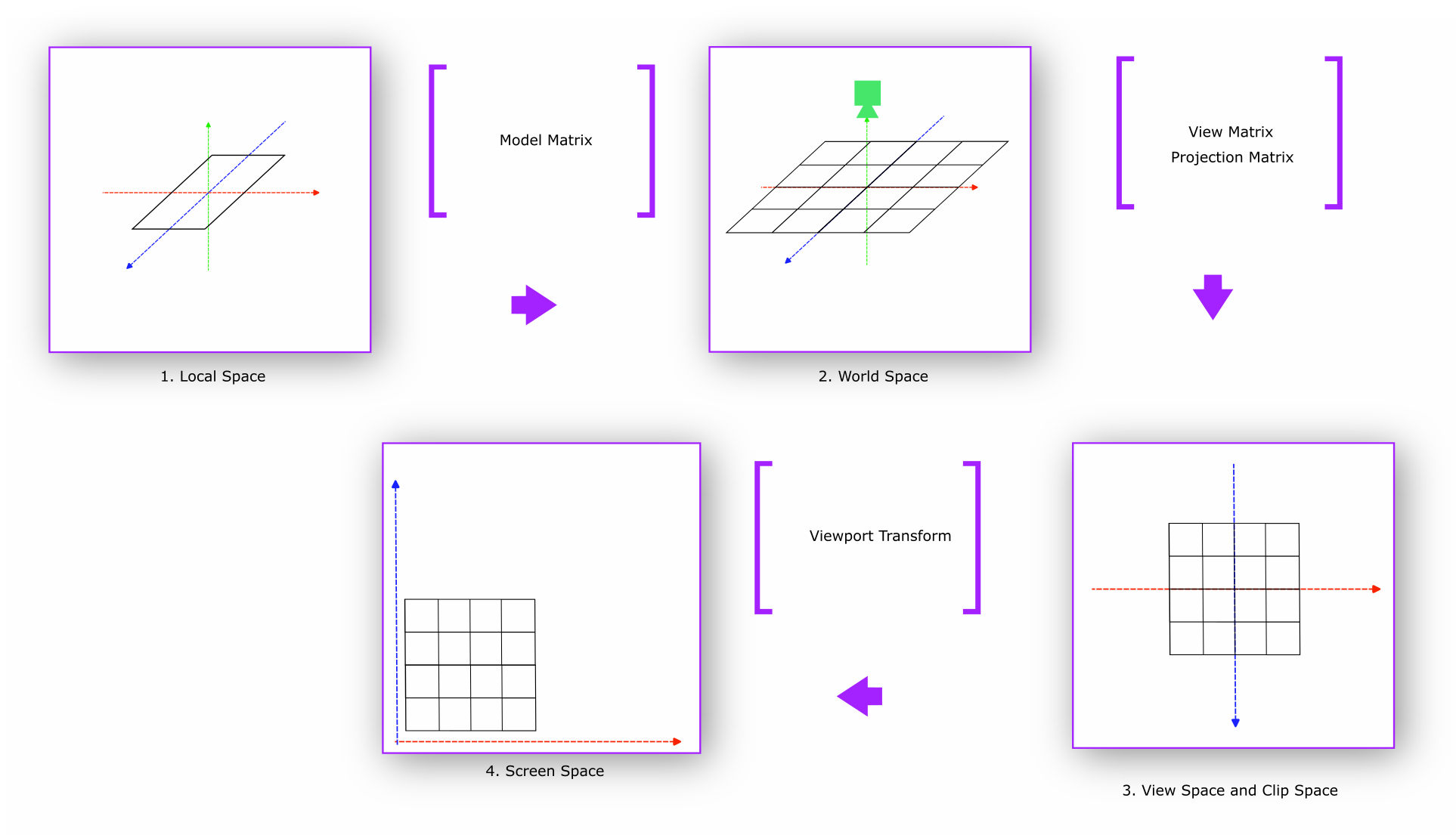

MapLibre Native requires a series of coordinate transformations to render a geometry from a map tile. This is where we refresh our rendering knowledge a bit. To render anything through a GPU:

1. Design and define the model in Local Space. We call anything that needs to be rendered a model. In Local Space, a model lives in its own coordinate system. For MapLibre Native, each individual tile is modeled in Local Space. This map tile is already populated by a map tile generation process. The longitude, latitude bounds of each tile are translated to pixel bounds in this local space.

2. When the model matrix is applied, Local Space transforms to World Space. In this space, the model coordinates are relative to the world’s origin, and all the individual map tiles to be rendered are placed in the world.

3. World Space is not yet seen from the viewpoint of a camera. To see the world from the viewpoint of a camera, the view matrix transformation is applied, which takes us to View Space. Cameras can see the world up to a certain distance, like human eyes can. To emulate that, we apply the Projection Matrix to View Space and end up in Clip Space[^1].

4. Finally, the result is transformed to the device screen (Screen Space) by applying the viewport transform matrix.
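The chain of spaces above can be followed numerically with a small Python sketch. It is deliberately simplified and is not how MapLibre Native builds its matrices: it works in 2D with 3x3 homogeneous matrices and an orthographic projection, whereas real renderers typically use 4x4 matrices and a perspective projection. The tile position, camera position, and viewport size are made-up values.

```python
from typing import List, Tuple

Matrix = List[List[float]]
Point = Tuple[float, float]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply two 3x3 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def translate(tx: float, ty: float) -> Matrix:
    return [[1.0, 0.0, tx], [0.0, 1.0, ty], [0.0, 0.0, 1.0]]


def scale(sx: float, sy: float) -> Matrix:
    return [[sx, 0.0, 0.0], [0.0, sy, 0.0], [0.0, 0.0, 1.0]]


def apply(m: Matrix, point: Point) -> Point:
    """Transform a 2D point (implicit homogeneous coordinate 1)."""
    x, y = point
    return (m[0][0] * x + m[0][1] * y + m[0][2], m[1][0] * x + m[1][1] * y + m[1][2])


TILE_SIZE = 512.0

# 1. Local -> World: place the tile in column 1, row 0 of the world.
model = translate(1 * TILE_SIZE, 0 * TILE_SIZE)
# 2. World -> View: a camera looking at world position (512, 512).
view = translate(-512.0, -512.0)
# 3. View -> Clip: orthographic projection of a 1024 x 1024 window to [-1, 1].
projection = scale(2.0 / 1024.0, 2.0 / 1024.0)
# 4. Clip -> Screen: map [-1, 1] onto an 800 x 600 viewport.
viewport = matmul(translate(400.0, 300.0), scale(400.0, 300.0))

local_point = (256.0, 256.0)  # centre of the tile in its own Local Space
world_point = apply(model, local_point)
view_point = apply(view, world_point)
clip_point = apply(projection, view_point)
screen_point = apply(viewport, clip_point)

# The four steps collapse into a single matrix, applied right to left.
combined = matmul(viewport, matmul(projection, matmul(view, model)))

print(world_point, view_point, clip_point, screen_point)
```

Collapsing the steps into one matrix is the reason GPUs work with matrices here: every vertex of a tile is multiplied by the same combined matrix.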

Going forward, scoping our discussion only to MapLibre Native, we will talk mostly about two categories of coordinate systems:

-

World Coordinates

-

Device Coordinates

World Coordinates are defined in World Space. They describe how this world defines itself in a three-dimensional space.[^2] Device coordinates, on the other hand, are used through View, Clip, and Screen Space. Their purpose is to define how the world will be projected on a screen.

The unit of measurement will be pixels in this document. When the map tiles are generated by a system, the unit of distance in each tile is measured in degree angles instead of meters, because angular distance stays the same if we move the angle across the axis of the Earth.

Figure 2 shows how a map tile passes through the rendering spaces and transformations:

Figure 2: Rendering Spaces and Transformations for Map Tiles

World Coordinates

World Coordinates for maps start with Geographic Coordinate Systems. Geographic Coordinate Systems use a three-dimensional model of the earth (an ellipsoid) to define specific locations on the surface and create a grid. The traditional longitude, latitude coordinate pair that defines a location is an example of geographic coordinates. EPSG:4326 (WGS84) is the reference Geographic Coordinate System in which most of the world’s geospatial data is defined and stored[^3]. There is no direct way to visualize the WGS84 coordinate system on a two-dimensional plane, in this case, the map.

Projections are used to translate WGS84 coordinates to a plane. To be specific, projections are used to translate a location on the ellipsoid to a two-dimensional square. EPSG:3857[^4], the projected Pseudo-Mercator Coordinate System, is such a coordinate system. EPSG:3857 is used by MapLibre Native as the default coordinate system to display maps. This system takes WGS84 coordinates and projects them onto a sphere. This stretches out the landmass in the hemispheres, but mathematically makes it simpler to project a location back to a 2D plane: a sphere divided into an angular equidistant grid produces rectangular grids when projected onto a 2D plane. The philosophy behind this was to make rendering maps easy, since drawing 2D squares on a plane is computationally trivial.

Our world can now be broken down into these squares, or tiles as we will call them going forward. This system imagines the world as a giant grid of tiles. Each tile has a fixed size defined in pixels.

worldSize = tileSize * number of tiles across a single dimension

For brevity, this document assumes the reader knows that map tiles are divided into a range of zoom levels, and that each tile at zoom N is divided into 4 tiles at zoom N+1. A tile size of 512 pixels and zoom level 11 yield the following worldSize:

worldSize = 512 * 2^11 = 1048576

Although each tile breaks into 4 at the next zoom level, we use a power of 2. This is because both the X and Y dimensions expand by a factor of 2.
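The relation between zoom level, tiles per axis, and world size can be checked with a short Python sketch. The function names are illustrative and not taken from the MapLibre Native code base.

```python
def tiles_per_axis(zoom: int) -> int:
    """Number of tiles along X (or Y) at a zoom level: doubles per level."""
    return 2 ** zoom


def tile_count(zoom: int) -> int:
    """Total number of tiles at a zoom level: quadruples per level."""
    return tiles_per_axis(zoom) ** 2


def world_size(tile_size: int, zoom: int) -> int:
    """Width (and height) of the world in pixels."""
    return tile_size * tiles_per_axis(zoom)


print(world_size(512, 11))  # 1048576, as computed above
print(tile_count(11), tile_count(12))
```

The total tile count grows by a factor of 4 per zoom level, while the count along a single axis, and therefore worldSize, only grows by a factor of 2.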

Example: We start by translating a WGS84 location with longitude -77.035915 and latitude 38.889814. Translating a longitude in degrees relies on normalizing the longitude range [-180, 180] to [0, 1048576]. This means the X pixel value requires shifting our coordinate by 180. For example, a location with longitude -77.035915 becomes:

X = (180 + longitude) / 360 * worldSize

= (180 + -77.035915) / 360 * 1048576

= 299,904
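As a minimal sketch, the longitude normalization above can be written as a small Python function. The name `lon_to_world_x` is hypothetical and chosen only for this example.

```python
WORLD_SIZE = 512 * 2 ** 11  # tile size 512 at zoom 11, as in the text


def lon_to_world_x(lon_deg: float, world_size: float) -> float:
    """Map a longitude in [-180, 180] degrees to an X pixel in [0, world_size]."""
    return (180.0 + lon_deg) / 360.0 * world_size


x = lon_to_world_x(-77.035915, WORLD_SIZE)
print(int(x))  # 299904
```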

Finding the X coordinate is easy to compute. But Y requires more than normalizing the range. This is due to the aforementioned stretching of space in the hemispheres^5^. A latitude value defined in WGS84 will not be at the same position after stretching. The computation, with angles in degrees, looks like the following for a latitude of 38.889814:

y = ln(tan(45 + latitude / 2))

= ln(tan(45 + 38.889814/ 2))

= 0.73781742861

Now, to compute the pixel value for y:

Y = (180 - y * (180 / π)) / 360 * worldSize

= (180 - 42.27382˚) / 360 * 1048576

= 401,156
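The two steps above (stretching the latitude, then normalizing it to pixels) can be sketched together in Python. The function name `lat_to_world_y` is an assumption made for illustration. Note that Python's `math.tan` expects radians, so the 45 degree offset becomes π/4.

```python
import math


def lat_to_world_y(lat_deg: float, world_size: float) -> float:
    """Map a WGS84 latitude in degrees to a Web Mercator Y pixel."""
    lat_rad = math.radians(lat_deg)
    # Mercator stretch: y = ln(tan(45 degrees + latitude / 2)), in radians
    y = math.log(math.tan(math.pi / 4 + lat_rad / 2))
    # Convert y back to degrees and normalize [180, -180] to [0, world_size]
    return (180.0 - math.degrees(y)) / 360.0 * world_size


y_px = lat_to_world_y(38.889814, 512 * 2 ** 11)
print(int(y_px))  # 401156
```

The equator maps to the vertical middle of the world, and Y grows towards the south because the origin is at the top left.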

Tile Coordinates

Our next pursuit is to translate World Coordinates to Tile Coordinates, because we want to know where exactly inside a map tile a location (longitude, latitude) gets rendered and vice versa. This system creates different pixel ranges per zoom level, so we append the zoom level to the X and Y pixel values. Dividing the pixel values by the tile size normalizes the X and Y values per tile. This means (x: 299,904, y: 401,156, z: 11) becomes (585.7471, 783.5067, z11). We divide our X and Y pixel values by the tile size because we want to know the starting coordinates of each tile instead of individual location coordinates. This helps in drawing a tile. If we now floor the components to integers, we get (585/783/11). This marks an individual tile’s X, Y, and Z.
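A minimal sketch of the divide-and-floor step follows. The helper `pixel_to_tile` is hypothetical, and the default tile size of 512 is the value assumed throughout this section.

```python
import math


def pixel_to_tile(x_px: float, y_px: float, zoom: int, tile_size: int = 512):
    """Return the (x, y, z) id of the tile that contains a world pixel."""
    tile_x = x_px / tile_size  # fractional tile position along X
    tile_y = y_px / tile_size  # fractional tile position along Y
    return (math.floor(tile_x), math.floor(tile_y), zoom)


print(pixel_to_tile(299904, 401156, 11))  # (585, 783, 11)
```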

To reach our goal of translating a location to a coordinate inside a tile, we need to know the extent of the tile. MapLibre Native follows the Mapbox Vector Tile (MVT) spec. Following said spec, MapLibre Native internally normalizes each tile to an extent of 8192. The tile extent describes the width and height of the tile in integer coordinates. This means a tile coordinate can have higher precision than a pixel, because a tile normally has a height and width of 512 pixels. In this case, with an extent of 8192, each Tile Coordinate has a precision of 512/8192 = 1/16th of a pixel. The tile extent origin (0,0) is at the top left corner of the tile, and (Xmax, Ymax) is at the bottom right. This means tile coordinate (8192, 8192) is in the bottom right corner. Any coordinate below 0 or above the extent is considered outside the tile. Geometries that extend past the tile’s area as defined by the extent are often used as a buffer for rendering features that overlap multiple adjacent tiles.

To finally deduce the Tile Coordinates, we multiply the fractional part of each tile component by the extent:

(585.7471, 783.5067, z11) -> (.7471 * 8192, .5067 * 8192) = (x: 6120, y: 4151)

This makes the Tile (585/783/11) and the Tile Coordinates (x: 6120, y: 4151) for the WGS84 location with longitude -77.035915 and latitude 38.889814.
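Taking the fractional tile position quoted above as given, the scaling to the tile extent can be sketched as follows. The helper name `tile_local_coords` and the rounding to the nearest integer are assumptions made for this example.

```python
import math

EXTENT = 8192  # tile extent stated in the text


def tile_local_coords(tile_x: float, tile_y: float, extent: int = EXTENT):
    """Scale the fractional part of a tile position to integer tile coordinates."""
    frac_x = tile_x - math.floor(tile_x)
    frac_y = tile_y - math.floor(tile_y)
    return (round(frac_x * extent), round(frac_y * extent))


print(tile_local_coords(585.7471, 783.5067))  # (6120, 4151)
print(512 / EXTENT)                           # 0.0625, i.e. 1/16 of a pixel
```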

After defining Tile Coordinates, our pursuit continues with translating these coordinates to native device coordinates. To reiterate our progress from the perspective of rendering, we just defined our local space with tile coordinates. Local coordinates are the coordinates of the object to be rendered relative to its local origin^6^. In this case, the objects are the map tiles. The next step in a traditional rendering workflow is to translate object coordinates to world coordinates. This is important to understand if we are to render multiple objects in the world. If we treat all tiles in a zoom level as being rendered on a single 3D horizontal plane, then the World Space has only one object. In MapLibre Native, this plane has its origin (0,0) positioned at the top left.

Device Coordinates

World space for MapLibre Native contains a plane in 3D with all the tiles for any specific zoom level. Map tiles are hierarchical in nature, in that they exist at different zoom levels. MapLibre Native internally stores a tree object that mimics a tile pyramid. However, it does not create a hierarchy of 3D planes where each plane mimics one zoom level. It reuses the same 3D plane to re-render the tiles of the requested zoom level, one zoom level at a time.
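To make the tile pyramid concrete, here is a small sketch of the parent and child relationships between tile ids in the common XYZ tiling scheme. It only illustrates the hierarchy; it is not the tree object MapLibre Native stores internally, and the function names are hypothetical.

```python
def children(x: int, y: int, z: int):
    """The four tiles at zoom z + 1 that cover tile (x, y, z)."""
    return [(2 * x + dx, 2 * y + dy, z + 1) for dy in (0, 1) for dx in (0, 1)]


def parent(x: int, y: int, z: int):
    """The tile at zoom z - 1 that contains tile (x, y, z)."""
    return (x // 2, y // 2, z - 1)


print(children(585, 783, 11))
print(parent(585, 783, 11))  # (292, 391, 10)
```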

The journey towards rendering the tiles on the device screen from the world space starts with the View Matrix, as defined in the world space. The key part here is the camera. A view matrix is a matrix that scales, rotates, and translates^7^ the world space from the view of the camera. To add the perspective of the camera, we apply the Projection Matrix. In MapLibre Native, camera initialization requires a map center, bearing, and pitch.

Initializing the map with a center (lon, lat) does not translate or move the 3D plane with tiles; rather, it moves the camera atop the defined position named center. In the rendering world, this is not the center of the 3D plane we render tiles on, but the position of the camera.

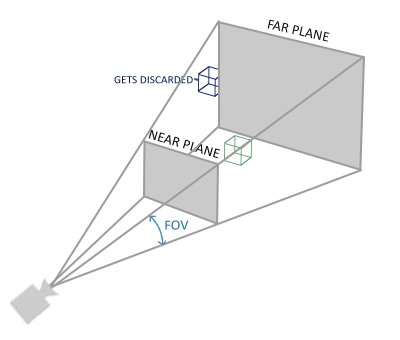

Figure 3: Perspective Frustum (Sourced from learnopengl.com)

The benefit of tile coordinates continues here. The camera representation we use here, to be specific the view matrix, can directly take the tile coordinates to move the camera to a particular tile in a zoom level. Once a tile is built, the GPU can quickly draw the same tile with different bearing, pan, pitch, and zoom parameters^8^.

If we keep following Figure 2, we see that we also need to add a projection matrix. MapLibre Native uses a perspective projection. A perspective projection matrix introduces the sense of depth through the camera: objects further from the camera look smaller and objects closer to the camera look bigger. This perspective component is defined by the parameter w. That is why the shaders that MapLibre Native uses at the time of writing take 4-dimensional vectors rather than 3-dimensional vectors. The 4^th^ dimension is this parameter w. Therefore, theoretically, a GL coordinate is of the form (x, y, z, w).

Before we jump into the transformations, let’s revisit an example scenario:

zoom: 11.6

map center: (38.891, -77.0822)

bearing: -23.2 degrees

pitch: 45 degrees

tile: 585/783/11

On top of this, MapLibre Native uses a field of view of 36.87 degrees or 0.6435011087932844 radians. This is somewhat arbitrary. The altitude of the camera used to be defined as 1.5 screen heights above the ground. The ground is the 3D plane that paints the map tiles. The field of view is derived from the following formula:

fov = 2 * arctan((height / 2) / (height * 1.5))
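As a quick check of the formula, the field of view can be computed in Python. The function name `field_of_view` is illustrative; the 1.5 screen heights altitude is the value stated above.

```python
import math


def field_of_view(height: float, altitude_in_heights: float = 1.5) -> float:
    """Field of view in radians for a camera placed above the ground plane."""
    return 2 * math.atan((height / 2) / (height * altitude_in_heights))


fov = field_of_view(742)
print(round(math.degrees(fov), 2))  # 36.87
```

Because the height cancels out of the ratio, the result is 2 * arctan(1/3) for any viewport height.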

Factoring in only the transformations of zoom, map center, bearing, pitch, and tile, with a viewport of 862 by 742 pixels, the projection matrix will look like^9^:

| | x | y | z | w |

|---|---|---|---|---|

| x | 0.224 | -0.079 | -0.026 | -0.026 |

| y | -0.096 | -0.184 | -0.062 | -0.061 |

| z | 0.000 | 0.108 | -0.036 | -0.036 |

| w | -503.244 | 1071.633 | 1469.955 | 1470.211 |

To use our tile coordinates, we will turn them into a 4D vector (x, y, z, w) with a neutral w value of 1. For brevity we use a z value of 0. For buildings and extrusions the z value will not be 0, but this document does not cover that.

So, tile coordinate (x: 6120, y: 4151, z: 0, w: 1) will transform to the following due to a vector multiplication with the projection matrix:

| | x | y | z | w |

|---|---|---|---|---|

| x = 6120 | 0.224 * 6120 = 1370.88 | -0.079 * 6120 = -483.48 | -0.026 * 6120 = -159.12 | -0.026 * 6120 = -159.12 |

| y = 4151 | -0.096 * 4151 = -398.496 | -0.184 * 4151 = -763.784 | -0.062 * 4151 = -257.362 | -0.061 * 4151 = -253.211 |

| z = 0 | 0.000 * 0 = 0 | 0.108 * 0 = 0 | -0.036 * 0 = 0 | -0.036 * 0 = 0 |

| w = 1 | -503.244 * 1 = -503.244 | 1071.633 * 1 = 1071.633 | 1469.955 * 1 = 1469.955 | 1470.211 * 1 = 1470.211 |

| Final Vector | 469.14 | -175.631 | 1053.473 | 1057.88 |

The resulting vector differs from the result of the simulation. This is because the matrix entries shown above are rounded, so we are multiplying with low precision.
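The multiplication can be reproduced with a short sketch. It assumes the row-vector convention used by the table, where each output component is the sum of one column, and it uses the rounded matrix entries quoted in this document, so it carries the same precision loss.

```python
# Rounded matrix entries as printed in the text (rows: input x, y, z, w)
MATRIX = [
    [0.224, -0.079, -0.026, -0.026],
    [-0.096, -0.184, -0.062, -0.061],
    [0.000, 0.108, -0.036, -0.036],
    [-503.244, 1071.633, 1469.955, 1470.211],
]


def transform(vec, matrix):
    """Row vector times 4x4 matrix: out[j] = sum over i of vec[i] * matrix[i][j]."""
    return [sum(vec[i] * matrix[i][j] for i in range(4)) for j in range(4)]


out = transform([6120, 4151, 0, 1], MATRIX)
# The y contribution to w is -0.061 * 4151 = -253.211
print([round(c, 3) for c in out])  # [469.14, -175.631, 1053.473, 1057.88]
```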

The full-precision final vector is (x: 472.1721, y: -177.8471, z: 1052.9670, w: 1053.7176). This is not perspective normalized. Perspective normalization happens when we divide all the components of this vector by the perspective component w.

(472.1721 / 1053.72, -177.8471 / 1053.72, 1052.9670 / 1053.72)

= (x: 0.4481, y: -0.1688, z: 0.9993)
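The perspective divide is a one-liner. A minimal sketch, with `perspective_divide` as a hypothetical helper name:

```python
def perspective_divide(vec):
    """Divide x, y, and z by the perspective component w."""
    x, y, z, w = vec
    return (x / w, y / w, z / w)


ndc = perspective_divide((472.1721, -177.8471, 1052.9670, 1053.7176))
print(tuple(round(c, 4) for c in ndc))  # (0.4481, -0.1688, 0.9993)
```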

Doing this will take us into clip space. Clip coordinates contain all the tile coordinates we wish to render in MapLibre Native, but only in a normalized coordinate space of [-1.0, 1.0].

All that is left now is to translate this to viewport coordinates. Following Figure 2, we use viewport transform to produce these coordinates:

Pixel Coordinates: (NormalizedX * width / 2 + width / 2, height / 2 - NormalizedY * height / 2)

= (0.4481 * 431 + 431, 371 - (-0.1688 * 371))

= (x: 624, y: 434)

These are the viewport screen coordinates where our desired WGS84 location with longitude -77.035915 and latitude 38.889814 will be rendered.
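The viewport transform can be sketched as below. It assumes the usual mapping of the normalized range [-1.0, 1.0] onto the viewport, which scales by half the width and half the height and flips Y so that the origin is at the top left; this is the mapping that reproduces the (624, 434) result. The function name `ndc_to_pixels` is hypothetical.

```python
def ndc_to_pixels(ndc_x: float, ndc_y: float, width: float, height: float):
    """Map normalized [-1, 1] coordinates to viewport pixels (origin top left)."""
    px = ndc_x * width / 2 + width / 2
    py = height / 2 - ndc_y * height / 2  # Y is flipped: +1 is the top edge
    return (px, py)


px, py = ndc_to_pixels(0.4481, -0.1688, 862, 742)
print(round(px), round(py))  # 624 434
```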

1. Clip coordinates are normalized to -1.0 to 1.0. For brevity, this document does not dive deep into 3D rendering basics. ↩

2. For brevity, this document is assuming the world we live in is three dimensional. ↩

3. For brevity, this document does not dive deep into reference ellipsoids that approximate the earth, also known as Datums. EPSG:4326 or WGS84 is such a Datum or reference ellipsoid. ↩

4. There are other coordinate systems such as EPSG:54001 that use equirectangles rather than squares to project the WGS84 coordinates. This document focuses on EPSG:3857 because MapLibre Native uses it by default. ↩

5. This document leaves out the trigonometric proof of this translation for brevity. To know more: https://en.wikipedia.org/wiki/Web_Mercator_projection ↩

6. For brevity, this document does not speak in depth about rendering basics with regard to coordinate systems. For more, please check: https://learnopengl.com/Getting-started/Coordinate-Systems ↩

7. Scale, rotate, and translate are common rendering transformations used to produce model, view, and projection matrices. These operations are applied right to left, as in translate first, rotate second, and scale last. Matrix multiplication is not commutative, so the order of operations matters. ↩

8. The piece of code we run on the GPU is called a shader. We will see more of how shaders influence MapLibre Native rendering later in the document. ↩

9. Matrix and examples produced from Chris Loer's work hosted at: https://chrisloer.github.io/mapbox-gl-coordinates/#11.8/38.895/-77.0757/40/60 ↩

Expressions

Expressions are a domain-specific language (DSL) built by Mapbox for vector styles. Mapbox Vector Styles are used with Mapbox Vector Tiles. Mapbox Vector Tiles is a vector tile specification initiated by Mapbox which was later widely adopted by the geospatial community.

To recap, Mapbox Vector Styles have two significant parts: Sources and Layers. Sources define where the geospatial features used to display the map are loaded from. They can be GeoJSON, Mapbox Vector Tiles (MVT), etc. We draw said features on the map using Layers.